Oracle Lab – GoldenGate 12c

1.环境准备

1.1.软件包

Oracle Linux 7.4 UEK(Unbreakable Enterprise Kernel)

V921569-01-7.4.0.0.0-x86_64.iso

GoldenGate 12.3.0.1.2 for Oracle on Linux x86-64

123012_fbo_ggs_Linux_x64_shiphome.zip

Database Software 12.1.0.2

linuxamd64_12102_database_1of2.zip

linuxamd64_12102_database_2of2.zip

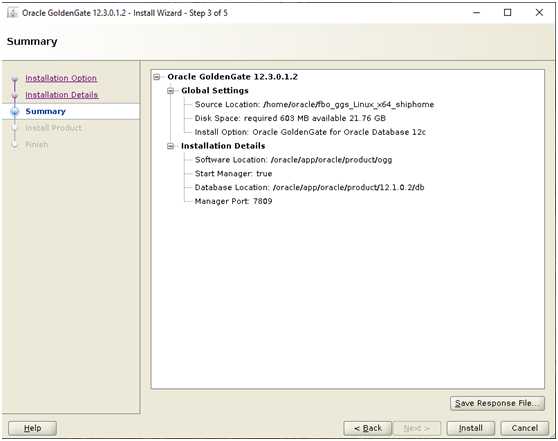

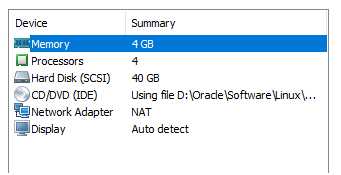

1.2.VMware配置

GG1 和 GG2 的配置一样,RAM 4GB,HDD 50GB

1.3.网络配置

1.4.Linux和Oracle 数据库安装

请参考相关的实验。

1.5.oracle user profile

export ORACLE_SID=MOORSE

export ORACLE_BASE=/oracle/app/oracle

export ORACLE_HOME=/oracle/app/oracle/product/12.1.0.2/db

export TNS_ADMIN=?ORACLE_HOME/network/admin

export OGG_HOME=/oracle/app/oracle/product/ogg

export PATH=?ORACLE_HOME/bin:?OGG_HOME:?PATH

export LD_LIBRARY_PATH=?ORACLE_HOME/lib:?OGG_HOME:?LD_LIBRARY_PATH

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export DISPLAY=localhost:10.0

export PS1='[\u@\h:`pwd`]?'

alias sqlplus='rlwrap sqlplus'

alias rman='rlwrap rman'

alias ggsci='rlwrap ggsci'

1.6.开启归档模式

启动到mount状态

设置归档日志路径

alter system set log_archive_dest_1='location=/oradata/arc';

打开归档模式

alter database archivelog;

alter database open;

修改日志文件命名格式:

alter system set log_archive_max_processes = 4;

alter system set log_archive_format = "arc_%t_%s_%r.dbf" scope=spfile;

1.7.创建测试数据

解锁scott用户:

alter user SCOTT account unlock;

alter user scott identified by tiger;

如果scott用户不存在,可以新建:

CREATE USER scott IDENTIFIED BY tiger

default tablespace USERS

temporary tablespace TEMP

quota unlimited on USERS;

grant connect , resource, create session, alter session to scott;

grant insert any table, update any table,delete any table, drop any table to scott;

grant create table, create sequence to scott;

-- 创建数据表

CREATE TABLE scott.dept (

deptno NUMBER(2) CONSTRAINT PK_DEPT PRIMARY KEY,

dname VARCHAR2(14) ,

loc VARCHAR2(13)

) ;

CREATE TABLE scott.emp (

empno NUMBER(4) CONSTRAINT PK_EMP PRIMARY KEY,

ename VARCHAR2(10),

job VARCHAR2(9),

mgr NUMBER(4),

hiredate DATE,

sal NUMBER(7,2),

comm NUMBER(7,2),

deptno NUMBER(2) CONSTRAINT FK_DEPTNO REFERENCES DEPT

);

CREATE TABLE scott.bonus (

enamE VARCHAR2(10) ,

job VARCHAR2(9) ,

sal NUMBER,

comm NUMBER

) ;

CREATE TABLE scott.salgrade (

grade NUMBER,

losal NUMBER,

hisal NUMBER

);

-- 插入测试数据 —— dept

INSERT INTO scott.dept VALUES (10,'ACCOUNTING','NEW YORK');

INSERT INTO scott.dept VALUES (20,'RESEARCH','DALLAS');

INSERT INTO scott.dept VALUES (30,'SALES','CHICAGO');

INSERT INTO scott.dept VALUES (40,'OPERATIONS','BOSTON');

-- 插入测试数据 —— emp

INSERT INTO scott.emp VALUES (7369,'SMITH','CLERK',7902,to_date('17-12-1980','dd-mm-yyyy'),800,NULL,20);

INSERT INTO scott.emp VALUES (7499,'ALLEN','SALESMAN',7698,to_date('20-2-1981','dd-mm-yyyy'),1600,300,30);

INSERT INTO scott.emp VALUES (7521,'WARD','SALESMAN',7698,to_date('22-2-1981','dd-mm-yyyy'),1250,500,30);

INSERT INTO scott.emp VALUES (7566,'JONES','MANAGER',7839,to_date('2-4-1981','dd-mm-yyyy'),2975,NULL,20);

INSERT INTO scott.emp VALUES (7654,'MARTIN','SALESMAN',7698,to_date('28-9-1981','dd-mm-yyyy'),1250,1400,30);

INSERT INTO scott.emp VALUES (7698,'BLAKE','MANAGER',7839,to_date('1-5-1981','dd-mm-yyyy'),2850,NULL,30);

INSERT INTO scott.emp VALUES (7782,'CLARK','MANAGER',7839,to_date('9-6-1981','dd-mm-yyyy'),2450,NULL,10);

INSERT INTO scott.emp VALUES (7788,'SCOTT','ANALYST',7566,to_date('13-07-87','dd-mm-yyyy')-85,3000,NULL,20);

INSERT INTO scott.emp VALUES (7839,'KING','PRESIDENT',NULL,to_date('17-11-1981','dd-mm-yyyy'),5000,NULL,10);

INSERT INTO scott.emp VALUES (7844,'TURNER','SALESMAN',7698,to_date('8-9-1981','dd-mm-yyyy'),1500,0,30);

INSERT INTO scott.emp VALUES (7876,'ADAMS','CLERK',7788,to_date('13-07-87','dd-mm-yyyy')-51,1100,NULL,20);

INSERT INTO scott.emp VALUES (7900,'JAMES','CLERK',7698,to_date('3-12-1981','dd-mm-yyyy'),950,NULL,30);

INSERT INTO scott.emp VALUES (7902,'FORD','ANALYST',7566,to_date('3-12-1981','dd-mm-yyyy'),3000,NULL,20);

INSERT INTO scott.emp VALUES (7934,'MILLER','CLERK',7782,to_date('23-1-1982','dd-mm-yyyy'),1300,NULL,10);

-- 插入测试数据 —— salgrade

INSERT INTO scott.salgrade VALUES (1,700,1200);

INSERT INTO scott.salgrade VALUES (2,1201,1400);

INSERT INTO scott.salgrade VALUES (3,1401,2000);

INSERT INTO scott.salgrade VALUES (4,2001,3000);

INSERT INTO scott.salgrade VALUES (5,3001,9999);

-- 事务提交

COMMIT;

2.安装GoldenGate

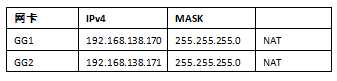

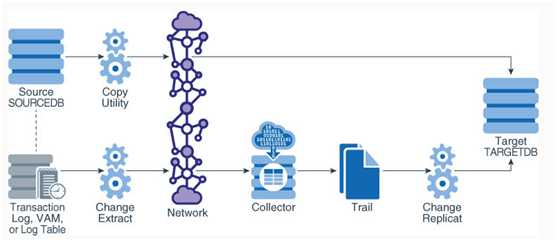

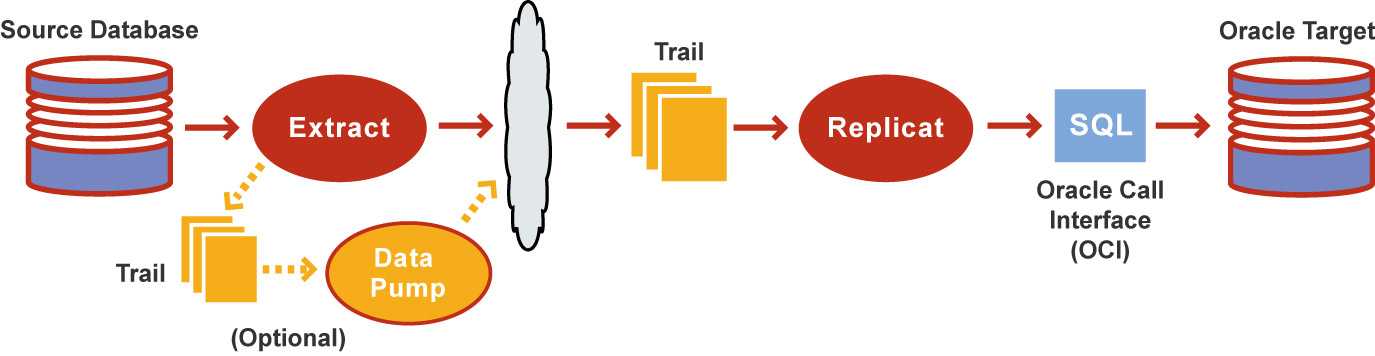

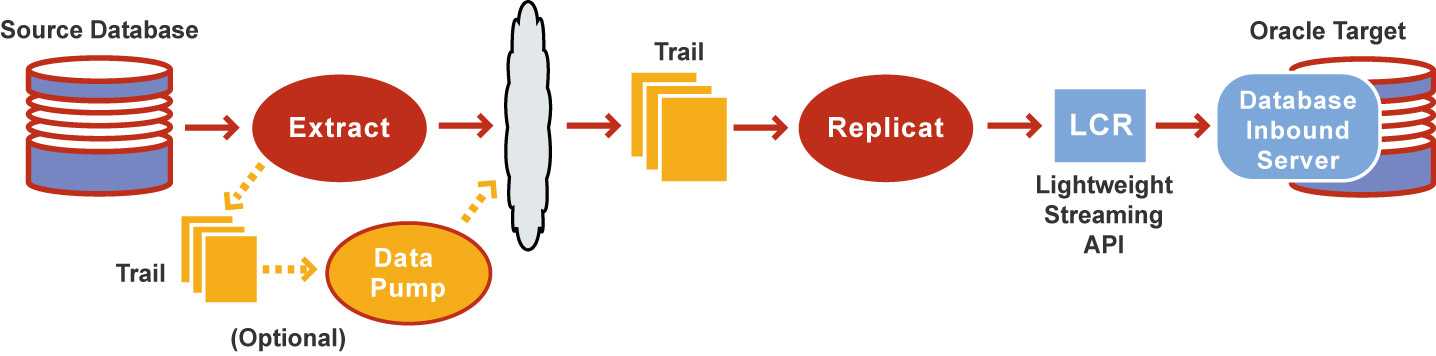

2.1.结构图

工作过程:首先extract在本地生成trail,然后datapump读取本地trail,发送到目标服务器。如果发生网络故障,extract进程仍然继续生成trail文件,datapump暂时停止传输,等待网络故障修复后,datapump重新启动传输,将堆积的本地trail文件发送至目标服务器。

2.2.创建安装目录

源端目录:

[oracle@gg1:/oracle/app/oracle/product]$mkdir ogg

目标端目录:

[oracle@gg2:/oracle/app/oracle/product]$mkdir ogg

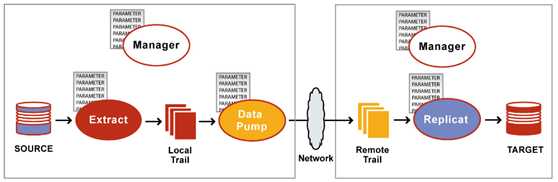

2.3.运行图形安装程序

[oracle@gg1:/home/oracle/fbo_ggs_Linux_x64_shiphome/Disk1]$./runInstaller

2.4.安装目录

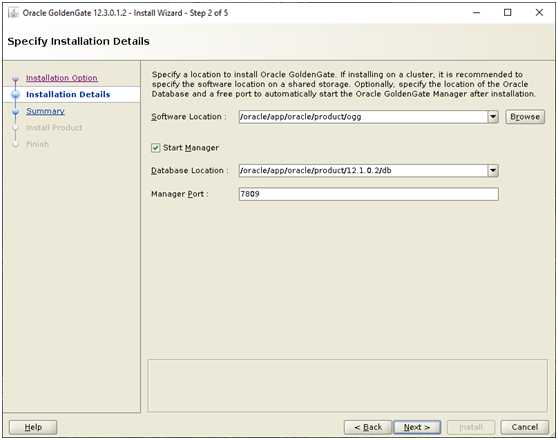

2.5.Summary

2.6.Install Progress

2.7.Finish

2.8.测试

[oracle@gg1:/oracle/app/oracle/product/ogg]$./ggsci

GGSCI (gg2) 1> view param mgr

PORT 7809

GGSCI (gg2) 2> info mgr

Manager is running (IP port gg2.7809, Process ID 29755).

3.配置GoldenGate 进程前准备

可以配置多个Extracts, Replicates进程:

extr01 -> pump01 ->rmttrail r01 rep01

extr02 -> pump02 -> rmttrail r02 rep02

3.1.创建GoldenGate管理用户

create tablespace OGG_DATA

datafile '/oradata/data/MOORSE/ogg_data.dbf'

size 100m autoextend on next 10m;

create user oggadmin identified by oggadmin

default tablespace OGG_DATA

temporary tablespace TEMP

quota unlimited on OGG_DATA;

grant connect,resource to oggadmin;

grant alter session to oggadmin;

grant select any dictionary, select any table to oggadmin;

grant insert any table, delete any table, drop any table, update any table to oggadmin;

grant create table, create sequence to oggadmin;

grant flashback any table to oggadmin;

grant select on v_$database to oggadmin;

grant select on sys.logmnr_buildlog to oggadmin;

grant select any transaction to oggadmin;

grant lock any table to oggadmin;

grant execute on dbms_flashback to oggadmin;

grant execute on dbms_logmnr_d to oggadmin;

grant execute on dbms_capture_adm to oggadmin;

grant execute on dbms_streams to oggadmin;

grant execute on utl_file to oggadmin;

grant execute on DBMS_XSTREAM_GG_ADM to oggadmin;

exec dbms_streams_auth.grant_admin_privilege('oggadmin');

exec DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE('oggadmin');

密码加密

GoldenGate管理用户登录数据库的用户名和密码,需要加密:

GGSCI (dbtrg) 1> encrypt password pwd ,ENCRYPTKEY default

Using Blowfish encryption with DEFAULT key.

Encrypted password: AACAAAAAAAAAAAIARFBCXDACYBXIVCND

Algorithm used: BLOWFISH

得到加密后的密码字符串,配置进程若使用加密过的密码,需要带参数(ENCRYPTKEY default)。

例如:

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

3.2.设置enable_goldengate_replication参数

oracle 11.2.0.4和oracle 12.1.0.2及之后的版本需要设置如下参数

SQL> alter system set enable_goldengate_replication=true scope=both;

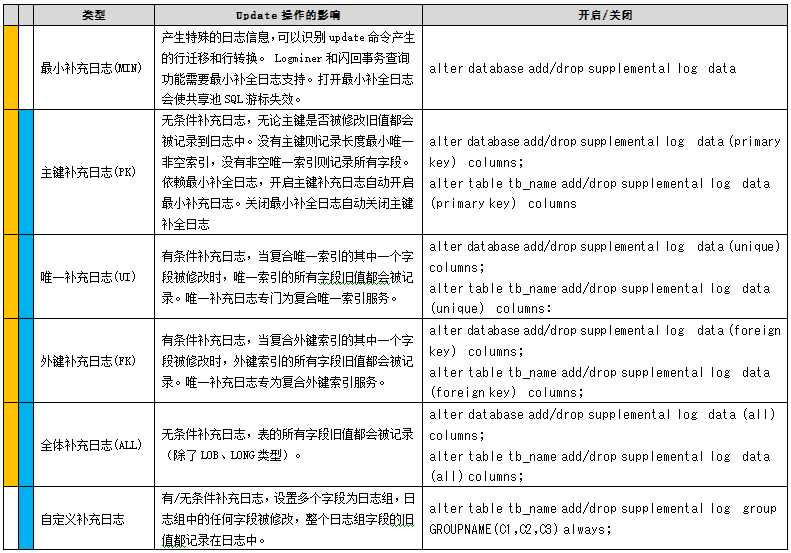

3.3.开启数据库附加日志

说明:

补充日志(Supplemental logging)分为三个级别:

1.Database level

2.Schema Level(12c才支持)

3.Table Level

补充日志(Supplemental logging)分为以下6种类型:

1. 最小补充日志(Minimal) (数据库级)

2. 全体补充日志(all)

3. 主键补充日志(primary key)

4. 唯一索引补充日志(unique index)

5. 外键补充日志(foreign key)

6. 自定义补充日志(表级)

数据库级的附加日志对数据库中的所有对象都有效,而表级附加日志则只对指定的表有效。而表级附加日志的开启的一个重要前提,就是要先打开数据级的最小附加日志,其命令就是:alter database add supplemental log data;

数据库级的最小附加日志是其它级别附加日志的基础。数据库级别补充日志可分为最小补充日志、主键补充日志、唯一索引补充日志、外键补充日志、全体补充日志5种。表级别补充日志可分为自定义补充日志,主键补充日志、唯一索引补充日志、外键补充日志、全体补充日志。表级别补充日志没有最小补充日志, 数据库级别补充日志没有自定义补充日志。

select SUPPLEMENTAL_LOG_DATA_MIN min,

SUPPLEMENTAL_LOG_DATA_PK pk,

SUPPLEMENTAL_LOG_DATA_UI ui,

SUPPLEMENTAL_LOG_DATA_FK fk,

SUPPLEMENTAL_LOG_DATA_ALL "all"

from v$database;

MIN PK UI FK all

-------- --- --- --- ---

YES NO NO NO NO

开启补充日志的命令:

alter database add supplemental log data (primary key) columns;

alter database add supplemental log data (unique) columns;

alter database add supplemental log data (foreign key) columns;

alter database add supplemental log data (all) columns;

alter database add supplemental log data;

alter table tb_name add supplemental log data (primary key) columns;

alter table tb_name add supplemental log data (unique) columns;

alter table tb_name add supplemental log data (foreign key) columns;

alter table tb_name add supplemental log data (all) columns;

alter table tb_name add supplemental log group group_name (col01,col03,col09) | always;

开启数据库级别的附加日志后,还需要开启用户级别或者表级别的附加日志,否则同步表的时候replicat进程会abended。

Internal GG error: missing key columns for statement (table scott.emp, io_type=15)

Aborting transaction on /ogg4oracle/dirdat/rt beginning at seqno 1 rba 56728

error at seqno 1 rba 56728

Problem replicating LILI.LOGMINER_TABLE to LILI.LOGMINER_TABLE

Mapping problem with compressed update record (target format)...

add trandata scott.salgrade

等效于sqlplus中执行:

alter table scott.salgrade add supplemental log data(all) columns;

用户级别的附加日志和表级别的附加日志,只需配置其中之一。如果只需要同步schema中的一些表,就不需要配置用户级别的附加日志,配置每张表的附加日志就可以了。

当某个表上无唯一键时,GoldenGate会尝试使用所有列的组合做为唯一键,但这样并不能保证一定可以唯一的表示表中的某一行。如果出现这种情况,需要为该表添加主键。

LOBs, LONGS, and ADTs等类型的列无法使用补充日志。

官方文档建议打开数据库最小附加日志+所有复制表的表级附加日志。测试而言,使用数据库的最小附加日志+PK是可以进行正常复制的,而数据库级的UI并没有给OGG的复制带来任何实质性帮助。可以使用数据库级的PK+UI级附加日志,但由于不是官方方案,请谨慎使用。

Oracle日志(redo log)一般用于实例恢复和介质恢复,但是如果需要靠日志还原完整的DML操作信息(比如GoldenGate),默认记录的日志量还不够。比如一个UPDATE的操作,默认redo只记录了rowid以及被修改的字段信息,但GoldenGate还原这个事务时,是在另一个库中进行还原,这时组成ROWID的四个部分(对象ID,数据文件ID,块号,行号)中,极可能是与原库不相同的,所以,不能根据ROWID来进行还原和处理。而要在SQL层面根据某个键值来定位记录,所以还需要将主键或者其他字段的信息附加到日志中去。要往日志中增加这些额外字段信息的操作,就是开启附加日志,即Add Supplemental Logging。打开附加日志,会使数据库的日志量和负载增加,所以,应该根据需要,只打开相应的级别的附加日志。

数据库级别的附加日志

SQL> shutdown immediate;

SQL> startup mount;

SQL> alter database force logging;

SQL> alter database add supplemental log data;

SQL> SELECT supplemental_log_data_min, force_logging FROM v$database;

SUPPLEME FORCE_LOGGING

-------- ---------------------------------------

YES YES

如果开启DDL复制,最好直接在数据库级别都打开:

alter database add supplemental log data (primary key,unique,foreign key,all) columns;

数据库级别的附加日志

SQL> shutdown immediate;

SQL> startup mount;

SQL> alter database force logging;

SQL> alter database add supplemental log data;

SQL> SELECT supplemental_log_data_min, force_logging FROM v$database;

SUPPLEME FORCE_LOGGING

-------- ---------------------------------------

YES YES

如果开启DDL复制,最好直接在数据库级别都打开:

alter database add supplemental log data (primary key,unique,foreign key,all) columns;

用户级别的附加日志

GoldenGate管理用户oggadimn必须具有执行DBMS_CAPTURE_ADM包的权限, 可以在GGSCI命令行下登录到数据库为其它的用户开启附加日志。

grant execute on sys.dbms_job to public;

或者:

grant execute on sys.dbms_job to scott;

GGSCI>dblogin userid oggadmin,password oggadmin

add schematrandata scott

2020-03-15 21:09:45 INFO OGG-01788 SCHEMATRANDATA has been added on schema "scott".

2020-03-15 21:09:45 INFO OGG-01976 SCHEMATRANDATA for scheduling columns has been added on schema "scott".

取消附加日志:

delete schematrandata

表级别的附加日志

如果没有启用用户级别的附加日志,必须启用表级别的主键或唯一索引附加日志,即使启用了用户级别的附加日志,也可以启用表级别的附加日志使主键附加日志替代每一个在用户级别指定的键,在表没有主键或唯一索引时,必须启用表级别的附加日志。

当表有主键或唯一索引时,使用此命令启用表级别的附加日志,如果表中没有主键或唯一索引时则必须指定一个或多个或者全部列做为主键。scott.emp表的主键是PK_EMP。

GGSCI>dblogin userid oggadmin,password oggadmin

add trandata scott.emp

3.4.配置检查点

GGSCI>dblogin userid oggadmin,password oggadmin

add checkpointtable oggadmin.checkpoint

edit param ./GLOBALS

GGSCHEMA oggadmin

CHECKPOINTTABLE oggadmin.checkpoint

define allow_invisible_index_keys =‘TRUE’

注意:Integrated capture模式无需配置Checkpoint.

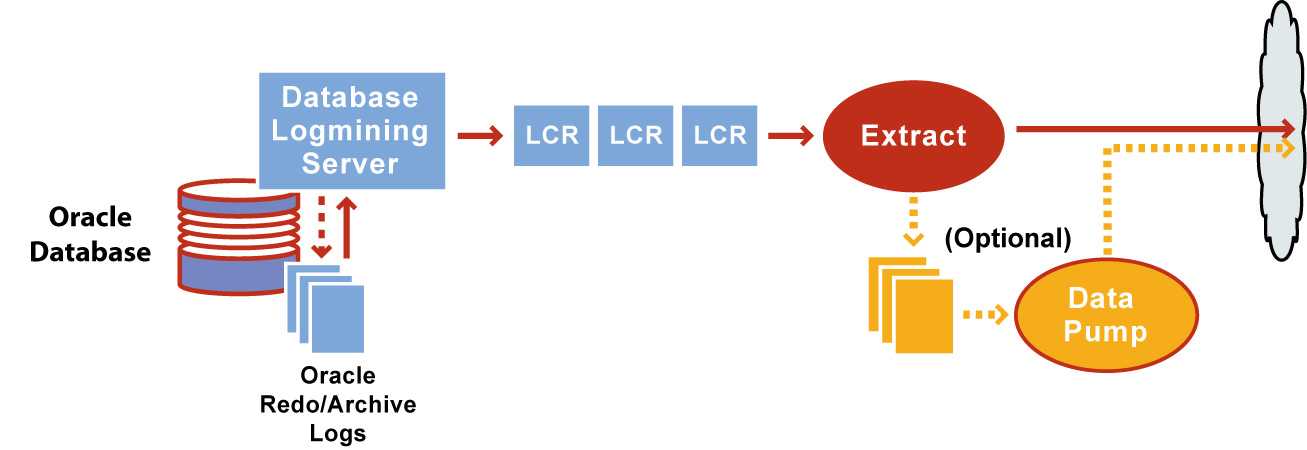

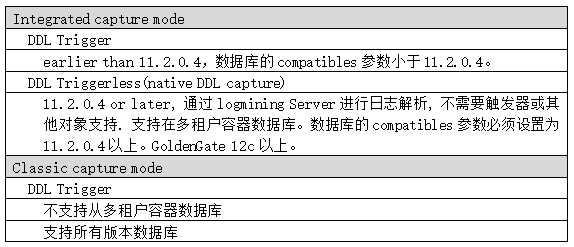

4.集成抽取模式和传统抽取模式

可以选其中一种进行试验,或者两种一起。GoldenGate 支持混合模式。

4.1.抽取模式说明

GoldenGate从11.2版本开始提出了Integrated模式,使用了stream相关技术。对于Source为Oracle的数据库,Extract 支持classic capture 和 integrated capture mode两种模式.

Classic capture mode: 是以前用的从联机或归档日志中直接挖掘交易日志的方法。

Integrated capture:

源端:

不再使用GoldenGate用户去挖掘信息,而是使用Logmining Server(OUT bound Server)进程读数据中的日志信息,转换生成Logical Change records(LCR)文件, extract进程再将读取这个LCR文件把数据存入trail文件。

目标端:

读取源端传输过来的Trail files,执行DATA数据过滤和转换操作,Replicate通过Lightweight Streaming API和目标库后台进程Database IN bound Server建立连接,将LCR传输到Inbound Server,然后apply这些数据到目标数据库中。

GoldenGate User -> Logmining Server -> Logical Change records(LCR)-> Trail files -> Remote Trail -> Data filter -> Lightweight Streaming API -> Database INbound Server -> Apply

4.2.Integrated Capture的优势

相对Classic侵入性更高一些,与Oracle结合度更高一些

兼容性上支持更多的数据类型(IOT、compression、XML、LOB)

无需为RAC、ASM、TDE、RMAN做额外的配置

多线程抽取(producer thread: capture redo;consumer thread:process redo),并发处理过程的性能提升

OGG12c对oracle 12c 多租户的捕获(必须集成抽取模式才能支持)

DDL无需trigger(OGG12c、DB11204)

Integrated capture uses the database logmining server to access the Oracle redo stream, with the benefit of being able to automatically switch between different copies of archive logs or different mirrored versions of the online logs. Thus integrated capture can transparently handle the unavailability of a log file caused by disk corruption, hardware failure, or operator error, assuming that additional copies of the archived and online logs are available

Integrated capture enables faster filtering of tables.

Integrated capture handles point-in-time recovery and RAC integration more efficiently.

Integrated capture features integrated log management. The Oracle Recovery Manager (RMAN) automatically retains the archive logs that are needed by Extract.

利用Oracle自身的API,并行性能高,默认4个线程同时运行。

Classic时需把一些大表做range方式进行表分区,或把一些比较繁忙的表独立放到一个进程里,配置Integrated方式后自动处理。

Integrated capture模式无需配置Checkpoint信息

4.3.Integrated 模式的部署方式和相关视图

local部署

source database 和mining database是同一个库,源库和集成抽取进程在同一台主机,无需额外配置。

downstream部署

source database 和mining database是同一平台的不同数据库,需要把source数据库的redo传输到mining database上来,需要额外的配置,但会降低对source数据库的性能影响。源库将归档日志传送给 downstream数据库。

real-time mining, mining DB和Source DB一对一,同时需要在mining DB上创建额外的standby redo log,接收源数据库借助Data Guard redo transport发送的redo。

mining DB和Source DB和一对多的关系,不需要在mining DB上创建standby redo log, 所有的Extract都是从archived logs中挖掘数据。

混和部署

For Oracle RDBMS 11.2.0.3 with the patch for bug 13560925, you can use either integrated capture, classic capture, or a combination of the two modes. You can divide your tables between two or more Extracts in different capture modes depending on the attributes and data types of the tables. The Oracle GoldenGate parameter files, trails, conversion capabilities, mapping options, and replication mechanisms are fundamentally the same in both modes.

相关视图:

dba_capture

gv&goldengate_capture

v&logmnr_session

v&logmnr_stats

dba_xstream_outband_process

gv&xstream_outband_server

v&gg_apply_*

DBA_APPLY_*

4.4.两种模式之间的转换

Integrated Capture模式和Classic Capture模式之间的转换:

ALTER EXTRACT 命令可以实现这两种模式之间的转换,前提是必须先用REGISTER EXTRACT 注册这个primary Extract group.

REGISTER EXTRACT有2个用途(只能针对Priamry Extract Group)

Enable integrated capture mode.

Enable Extract in classic capture mode to work with Oracle Recovery Manager to retain the archive logs needed for recovery.

Example 1 REGISTER EXTRACT ext01 LOGRETENTION

Example 2 REGISTER EXTRACT ext01 DATABASE

GGSCI (oggs) 7> info ext01 upgrade

Extract EXT01 is ready to be upgraded to integrated capture.

GGSCI (oggs) 8>

转换方法

UPGRADE INTEGRATED TRANLOG 从classic capture mode 到 integrated capture mode

ALTER EXTRACT finance, UPGRADE INTEGRATED TRANLOG

DOWNGRADE INTEGRATED TRANLOG 从integrated capture mode 到 classic capture mode

ALTER EXTRACT finance, DOWNGRADE INTEGRATED TRANLOG

4.5.Integrated capture mode调优建议

Integrated capture是通过logmining server在mining database对源数据库日志进行挖掘的,对资源的开销主要由2个部分组成:一是进程数,二是分配的Shared Memory. 在OGG中通过如下参数对这两个资源进行控制:

TRANLOGOPTIONS INTEGRATEDPARAMS (max_sga_size 200, parallelism 3)

(1)max_sga_size的单位是M

(2)Shared Memory从Streams pool中分配

(3)缺省情况下,一个integrated capture Extract分配的资源是(max_sga_size 1024, parallelism 2). 所以如果在一个database中运行3个integrated capture Extract,至少需要3G的Shared Memory,同时要考虑25%的内存预留,所以建议的stream_pool_size >= 3GB + (3GB * 0.25) = 3.75 GB

4.6.DDL同步配置

Extract can capture DDL operations from a source Oracle Database through the use of a special DDL trigger or natively through the Oracle logmining server. Which of these methods you can use depends on the Extract capture mode and the version of the source Oracle Database.

GoldenGate目前只支持Oracle和Teradata的DDL复制。

DDL复制和DML复制是相互独立的,可以只同步DDL,只同步DML,同步DDL和DML。

DDL语句长度限制为4MB,如果DDL语句的长度大于支持的长度,extract会生成一个告警信息并且忽略这个DDL操作。使用基于DDL触发器的捕获模式,被忽略的DDL存储在marker table中。使用ddl_ddl2file.sql脚本,可以像捕获其他DDL一样捕获被忽略的DDL语句,这个脚本会把DDL操作保存在USER_DUMP_DEST目录下的text file中。

基于Trigger的DDL同步原理:

与DML同步不同,DDL复制并不是简单得读取日志文件,然后生成trail文件进行传输。而是通过触发器的方式实现的。

当一条DDL语句执行后,会触发全局DDL Trigger,触发器会将所执行的DDL语句和其他一些记录写到GoldenGate的用户表里。

然后GoldenGate进程再从该这些表中获取该语句,写到Trail文件中, 再进行传输。

安装步骤:

1. 新创建一个独立的表空间,设置为自动扩展,能适应GGS_DDL_HIST和GGS_MARKER表的不断增大

2. 建立一个用户(schema) oggadmin,赋予utl_file执行权限

3. 编辑params.sql脚本,设置ddl_fire_error_in_trigger为TRUE。如果表空间满了,extract停止捕获DDL,避免DDL遗漏。扩展表空间后继续捕获DDL。

4. 创建或编辑GLOBALS文件,添加DDL schema:GGSCHEMA schema_name

5. 为了使DDL复制能够识别到不可见索引,需要在params.sql脚本中设置如下信息:define allow_invisible_index_keys = ‘TRUE’

6. 退出所有oracle会话,包括sqlplus,应用程序,OGG进程,和其他使用oracle的软件,避免开启新的会话。

7. 以拥有SYSDBA权限的用户登录,创建DDL触发器。

8. 运行marker_setup.sql。

9. 运行ddl_setup.sql(如果其他用户在使用这个表空间,则脚本报错。如果没有开启自动扩展,不会报错。)

10. 运行role_setup.sql,这个脚本删除并重建DDL同步需要的角色,并且赋予DDL对象DML权限。

11. 将创建的角色赋予需要执行extract的用户。如果有多个用户,需要执行多次。

12. 使DDL触发器生效。

13. 为了改善DDL触发器的性能,执行ddl_pin脚本。

5.配置GoldenGate 进程 – Classic Capture

源端Extract:Classic模式

目标端Replicat:Classic模式

5.1.DDL配置

源端和目标端执行DDL配置脚本:

#关闭回收站10G,11G以后不需要关闭

alter system set recyclebin=off scope=spfile;

#重启数据库

shutdown immediate

startup force;

[oracle@gg1:/home/oracle]cdOGG_HOME

[oracle@gg1:/oracle/app/oracle/product/ogg]$sqlplus / as sysdba

@marker_setup

@ddl_setup

@role_setup

GRANT GGS_GGSUSER_ROLE TO oggadmin;

@ddl_enable

@ddl_pin.sql

提示输入用户:oggadmin

原理:在源端建触发DDL,将捕获的DDL存入oggadmin.GGS_MARKER Table,再类似普通表把数据传递到目标Trail,然后逐条抽取执行,可执行且成功的插入目标GGS_MARKER Table。

5.2.源端配置MGR进程

GGSCI>info mgr

Manager is DOWN!

GGSCI>edit param mgr

PORT 7809

DYNAMICPORTLIST 7810-7820

AUTOSTART EXTRACT *

AUTORESTART EXTRACT *, RETRIES 5, WAITMINUTES 2

PURGEOLDEXTRACTS /oracle/app/oracle/product/ogg/dirdat/*, USECHECKPOINTS, MINKEEPDAYS 5

LAGREPORTHOURS 1

LAGINFOMINUTES 30

LAGCRITICALMINUTES 45

PORT通信端口7809, 源端和目标端需要保持一致。

DYNAMICPORTLIST当制定端口被占用或者出现通信故障,管理进程将会从列表中选择下一个端口尝试连接,避免通信端口的单点故障。

AUTOSTART EXTRACT当MGR进程启动后启动EXTRACT进程

AUTORESTART EXTRACT当EXTRACT进程中断后尝试自动重启,每隔2分钟尝试启动一次,尝试5次。

PURGEOLDEXTRACTS定期清理dirdat路径下的本地队列(local trail)。保留期5天,过期后自动删除。控制队列文件的目录不会增长过大。

LAGREPORTHOURS每隔一小时检查各进程延时情况,并记录到goldengate report文件。

LAGINFOMINUTES进程复制延时超过30分钟,向日志文件记录一条错误日志

LAGCRITICALMINUTES传输延时超过45分钟将写入警告日志

启动,停止,查看mgr进程:

start mgr

stop mgr

info mgr

5.3.源端配置Extract进程

add extract extr01,tranlog,begin now

extr01将捕获数据库变更的数据并将这些数据保存到trail文件里, 需要配置trail文件的目录和文件名。trail文件名有8个字符,只需要制定2个字符,其余6个字符由GoldenGate填充。

ADD EXTTRAIL /oracle/app/oracle/product/ogg/dirdat/ex, EXTRACT extr01

配置extr01的参数

edit param extr01

extract extr01

SETENV(ORACLE_SID=MOORSE)

SETENV(NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

REPORTCOUNT EVERY 30 MINUTES, RATE

DISCARDFILE /oracle/app/oracle/product/ogg/dirrpt/exterr.dsc, APPEND, MEGABYTES 1024

DISCARDROLLOVER AT 1:00

EXTTRAIL /oracle/app/oracle/product/ogg/dirdat/ex

DBOPTIONS ALLOWUNUSEDCOLUMN

FETCHOPTIONS NOUSESNAPSHOT

FETCHOPTIONS FETCHPKUPDATECOLS

DDL include OBJNAME scott.*

ddloptions report

table scott.*;

GGSCI (gg1 as oggadmin@MOORSE) 40> info extr01

EXTRACT EXTR01 Last Started 2020-03-18 11:34 Status RUNNING

Checkpoint Lag 00:00:00 (updated 00:00:04 ago)

Process ID 6503

Log Read Checkpoint Oracle Redo Logs

2020-03-18 22:20:13 Seqno 30, RBA 10633216

SCN 0.1833094 (1833094)

REPORTCOUNT每隔30分钟报告一次从程序开始到现在的抽取进程或者复制进程的事物记录数,并汇报进程的统计信息

DISCARDFILE将执行失败的记录保存在discard file中,discard file文件记录了GoldenGate进程错误、数据库错误、GoldenGate操作等信息。该文件位于/oracle/app/oracle/product/ogg/dirrpt/extsr.dsc, 大小为1024MB。 文件中已经包含记录的话,再后面继续追加,不删除之前的记录。

DISCARDROLLOVER为了防止discard file被写满,每天1:00AM做一次文件过期设定

EXTTRAIL队列文件路径, trail文件存放路径。

DYNAMICRESOLUTION有时候开启OGG进程的时候较慢,可能是因为需要同步的表太多,OGG在开启进程之前会将需要同步的表建立一个记录并且存入到磁盘中,这样就需要耗费大量的时间。使用该参数来解决此问题。此参数已经不用了。

DBOPTIONS ALLOWUNUSEDCOLUMN 用于阻止抽取进程抽取数据时由于表含有unused列而导致进程异常终止(abend)。使用该参数,抽取进程抽取到unused列时也会向日志文件记录一条警告信息。

FETCHOPTIONS NOUSESNAPSHOT 默认值为 usesnapshot,表示利用数据库闪回读取数据。Nousesnapshot表示直接从原表读取相关数据。

FETCHOPTIONS FETCHPKUPDATECOLS 当使用了HANDLECOLLISIONS时,请使用该参数。复制进程出现丢失update记录(missing update)并且更新的是主键,update将转换成insert。由于插入的记录可能不是完整的行,若要保证完整需要加入此参数。

DDL 参数: Use the DDL parameter to: enable DDL support, filter DDL operations, configure a processing action based on a DDL record.

When used without options, the DDL parameter performs no filtering, and it causes all DDL operations to be propagated as follows:

As an Extract parameter, it captures all supported DDL operations that are generated on all supported database objects and sends them to the trail.

As a Replicate parameter, it replicates all DDL operations from the Oracle GoldenGate trail and applies them to the target. This is the same as the default behavior without this parameter.

如果归档存放在ASM

TRANLOGOPTIONS ASMUSER sys@ASM,ASMPASSWORD xxxx

5.4.源端配置Data Pump进程

如果不使用Data Pump,extract进程发送抽取捕获的变更数据到remote trail,如果配置了Data Pump,extract进程抽取捕获数据写入到local trail,Data pump读取local trail并且通过网络发送到remote trail,data pump 加强了源端和目标端抽取捕获数据的可用性,

1. 保护网络传输失败和目标端失败;

2. 可以实现复杂的数据过滤和转换;

3. 可以结合多个数据源到目标端;

4. 可以同步一个源数据到多个目标端。

创建Data Pump指定local trail文件位置,EXTTRAILSOUCE 指定local trail文件位置和主抽取进程(extr01)中指定的trail目录和trail文件命必须一致,因为Data Pump进程要从此读取主抽取进程生成的trail文件。

ADD EXTRACT pump01, EXTTRAILSOURCE /oracle/app/oracle/product/ogg/dirdat/ex

edit param pump01

extract pump01

SETENV(ORACLE_SID=MOORSE)

SETENV (NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

RMTHOST gg2, mgrport 7809, COMPRESS

RMTTRAIL /oracle/app/oracle/product/ogg/dirdat/tr

table scott.*;

RMTHOST 目标端主机地址,管理进程端口号,投递前压缩队列文件

PASSTHRU 表示传输进程直接跟抽取进程交互,而不再和数据库进行交互,减少数据库资源的利用。

RMTTRAIL 目标端保存队列文件的目录

DYNAMICRESOLUTION 动态解析表名

指定Data Pump进程发送trail文件到目标端的位置(目标端trail文件添加到队列中)

add rmttrail /oracle/app/oracle/product/ogg/dirdat/tr, extract pump01

源端可以配置多个主抽取进程,也可以配置多个Data Pump进程,但必须为每个要同步的目标端配置一个Data Pump进程。

DYNAMICRESOLUTION 此参数已经不用了。

5.5.目标端配置MGR进程

edit param mgr

PORT 7809

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

DYNAMICPORTLIST 7809-7820

AUTOSTART REPLICAT *

AUTORESTART REPLICAT *, RETRIES 5, WAITMINUTES 2

PURGEOLDEXTRACTS /oracle/app/oracle/product/ogg/dirdat/*, USECHECKPOINTS, MINKEEPDAYS 5

PURGEDDLHISTORY MINKEEPDAYS 7, MAXKEEPDAYS 10

PURGEMARKERHISTORY MINKEEPDAYS 7, MAXKEEPDAYS 10

LAGREPORTHOURS 1

LAGINFOMINUTES 30

LAGCRITICALMINUTES 45

PORT 通信端口7809, 源端和目标端需要保持一致。

DYNAMICPORTLIST 当制定端口被占用或者出现通信故障,管理进程将会从列表中选择下一个端口尝试连接,避免通信端口的单点故障。

AUTOSTART REPLICAT 当MGR进程启动后启动REPLICAT进程

AUTORESTART REPLICAT 当REPLICAT进程中断后尝试自动重启,每隔2分钟尝试启动一次,尝试5次。

PURGEDDLHISTORY 删除DDL历史表,最小保存7天,最大保存10天。

LAGREPORTHOURS 每隔一小时检查各进程延时情况,并记录到goldengate report文件。

LAGINFOMINUTES 进程复制延时超过30分钟,向日志文件记录一条错误日志

LAGCRITICALMINUTES 传输延时超过45分钟将写入警告日志

5.6.目标端配置Replicat进程

配置检查点

Checkpoint存储从文件读取和写入的检查点位置,用于还原和恢复数据,Checkpoint确保发生变化并提交(commit)的数据被extract抽取捕获和被replicat进程应用到目标端, 保证在系统、网络或者GoldenGate需要重启进程时发生的错误不会导致数据丢失, 在复杂的同步配置中checkpoints启用多个extract和replicat进程从同一个trail集中读取数据。

GGSCI>dblogin userid oggadmin,password oggadmin

add checkpointtable oggadmin.checkpoint

edit param ./GLOBALS

GGSCHEMA oggadmin

CHECKPOINTTABLE oggadmin.checkpoint

define allow_invisible_index_keys ='TRUE'

首次编辑GoldenGate配置文件时,GLOBALS文件以大写命名并且没有扩展名, 创建在$OGG_HOME目录

配置复制进程

Replicat进程读取tail文件和重构DML、DDL并应用到目标数据库, Replicat编译SQL一次,当变量值不同时重复使用编译过的SQL, Replicat进程可以像extract进程一样配置初始化数据加载(直接从数据源中加载静态的数据)和在某个时间点后源端与服务端变更数据同步(从在线日志或归档日志抽取捕获变更的数据);

add replicat rep01, exttrail /oracle/app/oracle/product/ogg/dirdat/tr, checkpointtable oggadmin.checkpoint

edit param rep01

REPLICAT rep01

SETENV(ORACLE_SID=MOORSE)

SETENV(NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

REPORT AT 06:00

REPORTCOUNT EVERY 30 MINUTES, RATE

REPORTROLLOVER AT 02:00

REPERROR DEFAULT, ABEND

ALLOWNOOPUPDATES

ASSUMETARGETDEFS

HANDLECOLLISIONS

DISCARDFILE /oracle/app/oracle/product/ogg/dirrpt/reperr.dsc, APPEND, MEGABYTES 1024

DISCARDROLLOVER AT 02:00

DDL include OBJNAME scott.*

ddloptions report

DDLERROR DEFAULT IGNORE RETRYOP MAXRETRIES 3 RETRYDELAY 5

MAP scott.*, target scott.*;

REPORT 每天06:00定期生成一个report文件

REPORTCOUNT 每隔30分钟报告一次从程序开始到现在的抽取进程或者复制进程的事物记录数,并汇报进程的统计信息

REPORTROLLOVER 为了防止report file被写满,每天2:00做一次文件过期设定

REPERROR 除了特殊指定的REPERROR语句,报告所有复制期间出现的错误,回滚非正常中断的事物和进程。遇到不能处理的错误就自动abend,启动需要人工干预处理

ALLOWNOOPUPDATES 当源表有排除列情况或者有目标表不存在的列时,当更新这列goldengate默认报错。应用该参数后,即可让goldengate生成一条警告信息而不是报错。

ASSUMETARGETDEFS 使用ASSUMETARGETDEFS参数时,用MAP语句中指定的源表和目标表具有相同的列结构。它指示的Oracle GoldenGate不在源端查找源表的结构定义。

HANDLECOLLISIONS 用于goldengate自动过滤不出来的冲突记录,为了严格保证数据一致性

DISCARDFILE 将执行失败的记录保存在discard file中,discard file文件记录了GoldenGate进程错误、数据库错误、GoldenGate操作等信息。该文件位于./dirrpt/repsa.dsc,大小为1024MB。 文件中已经包含记录的话,再后面继续追加,不删除之前的记录。

DISCARDROLLOVER 为了防止discard file被写满,每天2:00做一次文件过期设定

DDLERROR DLL错误处理,否则DLL失败后,replicate将失败

MAP 对应需要复制的对象,默认一一对应传输进程

GGSCI (gg2) 4> info REP01

REPLICAT REP01 Last Started 2020-03-18 22:43 Status ABENDED

Checkpoint Lag 10:51:08 (updated 00:01:27 ago)

Log Read Checkpoint File /oracle/app/oracle/product/ogg/dirdat/tr000000002

2020-03-18 11:52:26.948061 RBA 16860

5.7.删除进程

dblogin userid oggadmin,password oggadmin

delete rep02

6.配置GoldenGate 进程 – Integrated Capture

源端Extract:Integrated模式

目标端Replicat:Integrated模式

6.1.源端配置MGR进程

GGSCI>info mgr

Manager is DOWN!

GGSCI>edit param mgr

PORT 7809

DYNAMICPORTLIST 7810-7820

AUTOSTART EXTRACT *

AUTORESTART EXTRACT *, RETRIES 5, WAITMINUTES 3

PURGEOLDEXTRACTS /oracle/app/oracle/product/ogg/dirdat/*, USECHECKPOINTS, MINKEEPDAYS 3

LAGREPORTHOURS 1

LAGINFOMINUTES 30

LAGCRITICALMINUTES 45

If you hit this error:

GGSCI (olinux97) 3> start mgr

Process creation error: Cannot find executable file './mgr'

solution:

You need to launch GGSCI only from the OGG_HOME and not from anywhere else in the server.

cd $OGG_HOME

ggsci

start mgr

6.2.源端配置Extract进程

dblogin userid oggadmin,password oggadmin

register extract extr02 database

add extract extr02, integrated tranlog, begin now

add exttrail /oracle/app/oracle/product/ogg/dirdat/dx, extract extr02

配置extr02的参数

edit param extr02

extract extr02

SETENV(ORACLE_SID=MOORSE)

SETENV(NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

REPORTCOUNT EVERY 30 MINUTES, RATE

DISCARDFILE /oracle/app/oracle/product/ogg/dirrpt/exterr.dsc, APPEND, MEGABYTES 1024

DISCARDROLLOVER AT 1:00

WARNLONGTRANS 2HOURS, CHECKINTERVAL 5MINS

EXTTRAIL /oracle/app/oracle/product/ogg/dirdat/dx

cachemgr cachesize 512M

DBOPTIONS ALLOWUNUSEDCOLUMN

ddl include all

ddloptions report

statoptions resetreportstats

tranlogoptions excludeuser oggadmin

tranlogoptions integratedparams (max_sga_size 1024, parallelism 1)

table flight.*;

GGSCI (gg1 as oggadmin@MOORSE) 39> info extr02

EXTRACT EXTR02 Last Started 2020-03-18 22:19 Status RUNNING

Checkpoint Lag 00:06:57 (updated 00:00:07 ago)

Process ID 48142

Log Read Checkpoint Oracle Integrated Redo Logs

2020-03-18 22:12:56

SCN 0.0 (0)

WARNLONGTRANS : Use the WARNLONGTRANS parameter to specify a length of time that a transaction can be open before Extract generates a warning message that the transaction is long-running. Also use WARNLONGTRANS to control the frequency with which Oracle GoldenGate checks for long-running transactions.

When WARNLONGTRANS is specified, Oracle GoldenGate checks for transactions that satisfy the specified threshold, and it reports the first one that it finds to the Oracle GoldenGate error log, the Extract report file, and the system log. By default, Oracle GoldenGate repeats this check every five minutes.

6.3.源端pump进程

ADD EXTRACT pump02, EXTTRAILSOURCE /oracle/app/oracle/product/ogg/dirdat/dx

edit param pump02

extract pump02

SETENV(ORACLE_SID=MOORSE)

SETENV (NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

RMTHOST gg2, mgrport 7809, COMPRESS

RMTTRAIL /oracle/app/oracle/product/ogg/dirdat/ur

table flight.*;

add rmttrail /oracle/app/oracle/product/ogg/dirdat/ur, extract pump02

6.4.目标端MGR进程

edit param mgr

PORT 7809

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

DYNAMICPORTLIST 7809-7820

AUTOSTART REPLICAT *

AUTORESTART REPLICAT *, RETRIES 5, WAITMINUTES 2

PURGEOLDEXTRACTS /oracle/app/oracle/product/ogg/dirdat/*, USECHECKPOINTS, MINKEEPDAYS 5

PURGEDDLHISTORY MINKEEPDAYS 7, MAXKEEPDAYS 10

PURGEMARKERHISTORY MINKEEPDAYS 7, MAXKEEPDAYS 10

LAGREPORTHOURS 1

LAGINFOMINUTES 30

LAGCRITICALMINUTES 45

6.5.目标端Replicate进程

增加Relicate进程

add replicat rep02, integrated exttrail /oracle/app/oracle/product/ogg/dirdat/ur

配置rep02参数

edit param rep02

REPLICAT rep02

SETENV(ORACLE_SID=MOORSE)

SETENV(NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

USERID oggadmin, PASSWORD AACAAAAAAAAAAAIARFBCXDACYBXIVCND, ENCRYPTKEY default

REPORT AT 02:30

REPORTCOUNT EVERY 30 MINUTES, RATE

REPORTROLLOVER AT 02:00

REPERROR DEFAULT, ABEND

DBOPTIONS DEFERREFCONST

ALLOWNOOPUPDATES

ASSUMETARGETDEFS

HANDLECOLLISIONS

DISCARDFILE /oracle/app/oracle/product/ogg/dirrpt/reperr.dsc, APPEND, MEGABYTES 1024

DISCARDROLLOVER AT 02:00

DDL include all

ddloptions report

DDLERROR DEFAULT IGNORE RETRYOP MAXRETRIES 3 RETRYDELAY 5

MAP flight.*, target flight.*;

dblogin userid oggadmin,password oggadmin

REGISTER REPLICAT rep02 DATABASE

GGSCI (gg2) 2> info REP02

REPLICAT REP02 Last Started 2020-03-18 22:32 Status RUNNING

INTEGRATED

Checkpoint Lag 00:00:00 (updated 00:00:09 ago)

Process ID 49056

Log Read Checkpoint File /oracle/app/oracle/product/ogg/dirdat/ur000000000

2020-03-18 22:39:01.002130 RBA 3827

6.6.删除进程

dblogin userid oggadmin,password oggadmin

delete rep02

7.目标端数据的初始化

To use a database copy utility to establish the target data, you start a change-synchronization Extract group to extract ongoing data changes while the database utility makes and applies a static copy of the data. When the copy is finished, you start the change-synchronization Replicat group to re-synchronize rows that were changed while the copy was being applied. From that point forward, both Extract and Replicat continue running to maintain data synchronization. This method does not involve any special initial-load Extract or Replicat processes.

DML操作包括INSERT、UPDATE、DELETE、SELECT操作,而在这些操作中UPDATE、DELETE操作Redo只记录了变更的数据列以及行ID(ROWID),GoldenGate抽取数据后将其转换为自己的格式发送都目标端,假设源端有100条数据,从08:00 AM开始同步到目标端,而在同步开始前目标端没有初始化数据(目标端为空数据),那么08:00 AM后事物产生的UPDATE、DELETE DML操作发送到目标端,目标端GoldenGate Replicat进程会因为找不到数据而报错从而导致Replicat进程崩溃停止(ABENDED),所以这就需要我们在同步前初始化数据,初始化完后再同步,这样大大降低错误率。同步数据的方式可以通过DBLINK、EXP/IMP、SQLLDR或者表空间迁移等方式同步。

基于SCN号导出源端数据到目标端数据库,确认所有errors都被解决。

为了不会丢失任何数据,在初始化之前,必须查看数据库里是否还存在长事务。可以通过如下命令查看数据库事务的开始时间,并筛选出最小的开始时间,看是否大于Extract进程的启动时间,需要等到其开始时间大于Extract进程的启动时间后才能进行初始化。因为OGG只获取Extract启动后的变化数据。(如果是RAC环境需要查询GV$TRANSACTION视图)。

select min(start_time) from v$transaction;

select sysdate,dbms_flashback.get_system_change_number || '' from dual;

数据初始化之前先建一个测试表,测试抽取进程,Pump进程,Replicate进程,保证没有问题。然后停止Replicate进程,保持抽取进程开启进行数据初始化,初始化完成后,以after CSN的方式打开Replicate进程。

7.1.Data Pump

这种方法初始化存在一个问题,在初始化数据过程中会对undo造成比较大的压力,尤其是大型数据库。可以通过分割的Datapump来实现数据的分组同步,分散Undo的压力,然后将分组的Dump Data合并即可, 优点就是可以跨平台和跨版本初始化。

FLASHBACK_SCN 参数用于指定导出特定SCN时刻的数据。

设置Pump目录

select * from dba_directories;

create or replace directory DATA_PUMP_DIR as '/bck/pump';

查询SCN

select sysdate,dbms_flashback.get_system_change_number || '' from dual;

SYSDATE DBMS_FLASHBACK.GET_SYSTEM_CHANGE_NUMBER|

------------------ ----------------------------------------

18-MAR-20 1683113

导出数据

expdp \'sys/oracle as sysdba\' schemas=SCOTT, directory=DATA_PUMP_DIR dumpfile=Moorse0317a.dmp logfile=expdp0317a.log flashback_scn=1683113

拷贝到目标主机,导入数据

impdp \'sys/oracle as sysdba\' schemas=SCOTT, directory=DATA_PUMP_DIR table_exists_action=replace dumpfile=Moorse0317a.dmp logfile=impdp0317a.log

table_exists_action参数值:

1) skip:默认操作

2) replace:先drop表,然后创建表,最后插入数据

3) append:在原来数据的基础上增加数据

4) truncate:先truncate,然后再插入数据

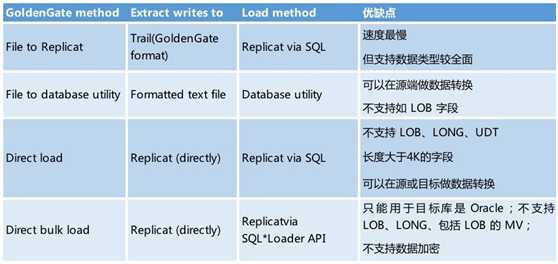

7.2.Direct Initial Load方式

支持跨数据库平台初始化。不支持LOB和LONG类型的字段、速度比较慢。如果是跨数据库平台,如SQLSERVER到ORACLE,可以选用OGG自带的迁移功能GoldenGate Initial Load。

Direct Initial load 常用于用于异构环境的初始化数据,也可以用于某些生产环境到测试环境的数据同步。初始化的同步过程一般只需要在 GoldenGate 上配置即可无需 DBA 进行参与,使用 Direct Initial load 处理某些数据表不一致后重新同步也非常方便,仅需要将想要同步表配置如参数启动进程即可完成表数据库的同步。

Extract 和 Replicat 进程的两种工作模式

– Initial Load — 初始数据的加载( special run、 batch run) 一般用于异构环境下的数据加载,初始化过程文件可以落地也可以选择不落地,抽取的方式就是将需要初始化的数据查询出来保存成 OGG 或者目标数据库可以识别的格式。

– Change Data Capture (CDC) — 增量数据的抽取 配合初始化数据库,完成整个初始化中增量数据的同步。

initial load 的四种工作模式

目标端数据落地

- File to replicat (Extract writes to a file that Replicat applies) 该方式利用 OGG 的 Initial Load Extract 进程将需要抽取的数据先进行解析生成 OGG 的 Trail 文件,然后将解析生成的文件传输到目标端主机的 OGG 指定的目录里,再由 OGG 的 Replicat 进行解析插入到目标数据库的对应表里。

-

File to database utility (Extract writes to a file formatted for a DB bulk loadutility) 该方式源端进程解析数据库数据,通过 FORMATASCII 参数控制抽取最终生成目标端数据库可以识别使用的 ASCII 文件,也就是文本文件。目标端使用自己数据库提供的数据加载程序进行数据加载完成初始数据,这种方式加载数据一般应用于异构的环境,比如 Oracle 到 IBM 数据库之间加载,Oracle 到 SQL Server 间。

目标端数据不落地

- Direct Load (Extract sends data directly to Replicat) 采用不落地的方式 Initial load extract 和 Initial load replicat 进程之间直接进行传输数据,在目标端没有 collertor 进程负责收集源端发送的 tail 文件。而目标端落地方式当 mgr 进程启动的时候会自动启动一个 collector 进程进行接收源端 pump 进程传输过来的 tail 文件。

Direct load 的配置方式如下:

ADD EXTRACT <name>, SOURCEISTABLE Extract parameters: RMTTASK REPLICAT, GROUP <name> ADD REPLICAT <name>,SPECIALRUN

两边的 group name 要对应上

- Direct bulk load (Replicat uses Oracle SQL*Loader API) 这种方式仅仅支持 Oracle 间的同步,同样也不会落地文件 Initial load replicat 进程直接调用 SQL*LOADER API进行数据入库,复制端使用 bulkload 参数。

参考Direct Initial Load实验

7.3.数据库备份恢复方式(RMAN)

检查抽取进程,保持开启状态, Pump进程不需要开启。

不要配置目标端的GoldenGate进程,数据库恢复会覆盖掉配置好的checkpoint表格和oggadmin表空间。

恢复数据完成后再配置目标端Replicate进程。

7.3.1.Performing full online backup of the source database

Pre-backup actions

SQL> select dbid from vdatabase;

DBID

----------

586816054

SQL> select 'target datafile '||file_id||' to '||'''/oradata/data/MOORSE/temp01.dbf'''||';' from dba_temp_files;

target datafile 1 to '/oradata/data/MOORSE/temp01.dbf';

SQL> select 'set newname for datafile '||file# ||' to '''||'/u01/linora'||''';' from vdatafile;

1 /oradata/data/MOORSE/system01.dbf

2 /oradata/data/MOORSE/flight_data.dbf

3 /oradata/data/MOORSE/sysaux01.dbf

4 /oradata/data/MOORSE/undotbs01.dbf

5 /oradata/data/MOORSE/ogg_data.dbf

6 /oradata/data/MOORSE/users01.dbf

#Copy the password from source to target

scp ORACLE_HOME/dbs/orapwMOORSE gg2:ORACLE_HOME/dbs/

#Record source redo log location

SQL> SELECT member FROM gv$logfile;

MEMBER

--------------------------------------------------------------------------------

/oradata/data/MOORSE/redo03.log

/oradata/data/MOORSE/redo02.log

/oradata/data/MOORSE/redo01.log

Performing full backup

cat full_backup.sh

#!/bin/bash

BK_TIME=`date +"20%y%m%d-%H%M%S"`

BK_PATH=/bck

rman log=BK_PATH/full_bk_BK_TIME.log append <<EOF

connect target /;

run{

allocate channel c1 device type disk;

allocate channel c2 device type disk;

crosscheck backup;

sql 'alter system archive log current';

backup database format 'BK_PATH/db_%d_%T_%U'; sql 'alter system archive log current';

backup archivelog all format 'BK_PATH/arc_%t_%s' delete all input;

backup current controlfile format 'BK_PATH/cntrl_%s_%p_%s'; backup spfile format 'BK_PATH/spf_%U';

release channel c1;

release channel c2;

}

crosscheck archivelog all;

crosscheck backupset;

delete noprompt obsolete;

delete noprompt expired backup;

delete noprompt archivelog all completed before 'sysdate-3';

delete force noprompt archivelog all completed before 'sysdate-7';

delete noprompt backup of database completed before 'sysdate - 30';

list backup summary;

EOF

Retrieving the SCN after backing up the database and scp the backup images to target host

SYSDATE DBMS_FLASHBACK.GET_SYSTEM_CHANGE_NUMBER|

------------------ ----------------------------------------

19-MAR-20 1845914

SQL> select group#,sequence#,members,status,first_change#,next_change# from gv$log;

GROUP# SEQUENCE# MEMBERS STATUS FIRST_CHANGE# NEXT_CHANGE#

---------- ---------- ---------- ---------------- ------------- ------------

1 34 1 CURRENT 1844991 2.8147E+14

2 32 1 INACTIVE 1844943 1844983

3 33 1 INACTIVE 1844983 1844991

We use the largest SCN of the inactive member 1844991

7.3.2.Restoring the database to target system

Modifying the initial parameter file

mkdir -p /oracle/app/oracle/admin/MOORSE/adump

mkdir -p /oradata/fra/MOORSE/

mkdir -p /oradata/arch/MOORSE/

Starting up the instance to nomount mode

RMAN> shutdown immediate

RMAN> startup nomount

Restoring the control file and mount the database

RMAN> restore controlfile from '/bck/rman/20200319-205839/ctl_08urgnol_1_1_20200319_MOORSE.bak';

Starting restore at 19-MAR-20

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=246 device type=DISK

channel ORA_DISK_1: restoring control file

channel ORA_DISK_1: restore complete, elapsed time: 00:00:01

output file name=/oradata/data/MOORSE/control01.ctl

output file name=/oradata/data/MOORSE/control02.ctl

Finished restore at 19-MAR-20

RMAN> sql 'alter database mount';

sql statement: alter database mount

released channel: ORA_DISK_1

Cataloging the backup images

RMAN> catalog start with '/bck/rman/20200319-205839';

Starting implicit crosscheck backup at 19-MAR-20

RMAN-00571: ===========================================================

RMAN-00569: =============== ERROR MESSAGE STACK FOLLOWS ===============

RMAN-00571: ===========================================================

RMAN-03002: failure of catalog command at 03/19/2020 21:18:07

RMAN-12010: automatic channel allocation initialization failed

RMAN-06189: current DBID 586816411 does not match target mounted database (586816054)

数据库不是空的所以会出错,删除所有的数据文件包括控制文件,归档日志文件,flashback文件, Audit文件 /oracle/app/oracle/admin/MOORSE/adump

RMAN> catalog start with '/bck/rman/20200319-205839';

Starting implicit crosscheck backup at 19-MAR-20

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=169 device type=DISK

Crosschecked 7 objects

Finished implicit crosscheck backup at 19-MAR-20

Starting implicit crosscheck copy at 19-MAR-20

using channel ORA_DISK_1

Finished implicit crosscheck copy at 19-MAR-20

searching for all files in the recovery area

cataloging files...

no files cataloged

searching for all files that match the pattern /bck/rman/20200319-205839

List of Files Unknown to the Database

=====================================

File Name: /bck/rman/20200319-205839/ctl_08urgnol_1_1_20200319_MOORSE.bak

File Name: /bck/rman/20200319-205839/spf_09urgnon_1_1.bak

Do you really want to catalog the above files (enter YES or NO)? yes

cataloging files...

cataloging done

List of Cataloged Files

=======================

File Name: /bck/rman/20200319-205839/ctl_08urgnol_1_1_20200319_MOORSE.bak

File Name: /bck/rman/20200319-205839/spf_09urgnon_1_1.bak

Restoring and recovering the database

RMAN> restore database;

Starting restore at 19-MAR-20

using channel ORA_DISK_1

channel ORA_DISK_1: starting datafile backup set restore

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_DISK_1: restoring datafile 00001 to /oradata/data/MOORSE/system01.dbf

channel ORA_DISK_1: restoring datafile 00002 to /oradata/data/MOORSE/flight_data.dbf

channel ORA_DISK_1: restoring datafile 00006 to /oradata/data/MOORSE/users01.dbf

channel ORA_DISK_1: reading from backup piece /bck/rman/20200319-205839/dbk_2_1_02urgno1_1_1_20200319_MOORSE.bak

channel ORA_DISK_1: piece handle=/bck/rman/20200319-205839/dbk_2_1_02urgno1_1_1_20200319_MOORSE.bak tag=TAG20200319T205841

channel ORA_DISK_1: restored backup piece 1

channel ORA_DISK_1: restore complete, elapsed time: 00:00:03

channel ORA_DISK_1: starting datafile backup set restore

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_DISK_1: restoring datafile 00003 to /oradata/data/MOORSE/sysaux01.dbf

channel ORA_DISK_1: restoring datafile 00004 to /oradata/data/MOORSE/undotbs01.dbf

channel ORA_DISK_1: restoring datafile 00005 to /oradata/data/MOORSE/ogg_data.dbf

channel ORA_DISK_1: reading from backup piece /bck/rman/20200319-205839/dbk_1_1_01urgno1_1_1_20200319_MOORSE.bak

channel ORA_DISK_1: piece handle=/bck/rman/20200319-205839/dbk_1_1_01urgno1_1_1_20200319_MOORSE.bak tag=TAG20200319T205841

channel ORA_DISK_1: restored backup piece 1

channel ORA_DISK_1: restore complete, elapsed time: 00:00:03

Finished restore at 19-MAR-20

Recover the database from specify SCN 1844991:

RMAN> recover database until scn 1844991;

Starting recover at 19-MAR-20

using channel ORA_DISK_1

starting media recovery

media recovery complete, elapsed time: 00:00:00

Finished recover at 19-MAR-20

Check the file header and the control file SCN

SQL> select checkpoint_change# from vdatafile;

CHECKPOINT_CHANGE#

------------------

1844991

1844991

1844991

1844991

1844991

1844991

6 rows selected.

SQL> select checkpoint_change# from vdatafile_header;

CHECKPOINT_CHANGE#

------------------

1844991

1844991

1844991

1844991

1844991

1844991

6 rows selected.

SQL> alter database open resetlogs;

If we don't have temp tablespace, we need to create the TBS:

cd /oradata/data/MOORSE

check if there is temp file

SQL> create temporary tablespace TEMP tempfile ' /oradata/data/MOORSE' size 200M;

SQL> alter database default temporary tablespace TEMP;

SQL> alter database open resetlogs;

--using spfile instead pfile

SQL> create spfile from pfile;

SQL> shutdown immediate

SQL> startup

7.3.3.Configuring the OGG on the target system

8.启动进程和测试

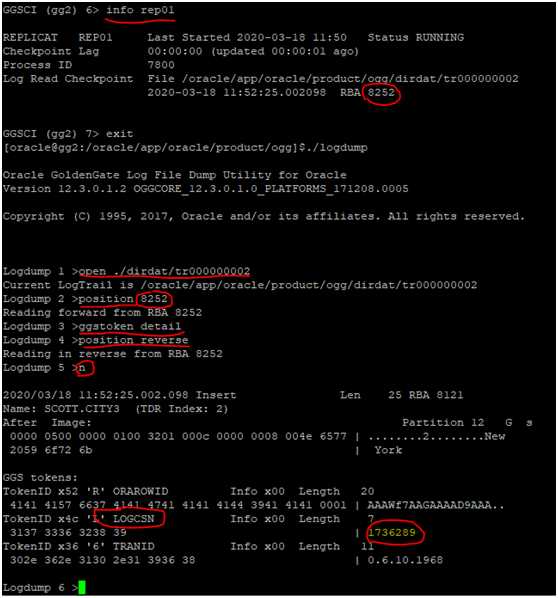

8.1.LOGCSN

CSN(Commit Sequence Number)有别于我们之前常听说的SCN(System Change Number),但是它们在功能上是可互换的。

GoldenGat在Trail文件中引入了一个机制,它保存了CSN, 在GoldenGate中叫LOGCSN。它对应到数据库里面的SCN。 LOGCSN可以可以在logdump中进行查看,它只存在于Translnd 0(开始事务)或3(单操作事务),如果一个事务包含多个操作,那么第一个操作必须含有一个TransID0,表示开始事务,同一事物下在之后的操作将包含Translnd 1,最后在结束该事务将包含一个TransID2,如果事务只有一个操作,那么此操作就会只包含一个TransID3。在每个文件的最后会记录一个LOGCSN号,如果LOGCSN号大于你记录的SCN号 说明队列文件中这个交易记录是在你导出数据后发生的,如果LOGCSN号小于你记录的SCN号 说明队列文件中这个交易记录是在你导出数据之前发生的。

可以在启动Replicat进程时指定LOGCSN号,应用我们希望开始应用更改的数据。LOGCSN号对应到数据库里面的系统改变号SCN号,在备份源库之前我们应该记录下当前数据库的SCN号,在目标库应用日志时就从当前这个SCN号开始应用。

如果取的SCN正好对应的是COMMIT操作:

ATCSN \

AFTERCSN \

如果取的SCN不是对应的commit操作:

不管是用ATCSN或AFTERCSN参数,Replicat进程都会把这个SCN号之前未完成的事务重新应用。在初始化恢复目标库时会回滚掉未提交的事务,所以不会出现数据重复。

建议使用AFTERCSN,不管在哪种情况下都能保证数据一致。

8.2.启动进程

start mgr

info all

stats extr01

stats pump01

view report extr01

view report pump01

info extr01

info pump01

数据初始化后,分别启动源端MGR进程、主抽取进程(Primary Extract)、Data Pump进程(Secondly Extract)以及目标端MGR进程、Replicat进程。

在目标端基于SCN号启动replicat进程

start replicat rep01,aftercsn 1845914

start replicat rep02,aftercsn 1845914

确认replicat进程中数据已经追平。

停止源端、目标端的OGG进程,迁移完成。

数据库备份恢复方式初始化数据后,启动进程:

恢复数据覆盖了之前配置好的进程,所以出现问题。应该是恢复数据之后配置进程。

2020-03-19 22:42:31 ERROR OGG-00446 Checkpoint table oggadmin.checkpoint does not exist. Create this table with t

he ADD CHECKPOINTTABLE command.

2020-03-19 22:42:31 ERROR OGG-01668 PROCESS ABENDING.

GGSCI (gg2 as oggadmin@MOORSE) 26> info rep01

REPLICAT REP01 Last Started 2020-03-18 22:53 Status ABENDED

Checkpoint Lag 00:00:00 (updated 23:36:09 ago)

Log Read Checkpoint File /oracle/app/oracle/product/ogg/dirdat/tr000000002

2020-03-18 22:57:52.646585 RBA 18345

2020-03-19 22:49:08 ERROR OGG-01668 PROCESS ABENDING.

alter REPLICAT rep01,extseqno 1,extrba 18345

2020-03-19 22:56:11 INFO OGG-06594 Replicat REP01 has been altered. Even the start up position might be updated, duplicate suppression remains active in next startup. To override duplicate suppression, start REP01 with NOFILTERDUPTRANSACTIONS option.

REPLICAT altered.

start replicat rep01,aftercsn 1845914

2020-03-19 22:59:48 ERROR OGG-00446 No data selecting position from checkpoint table oggadmin.checkpoint for group 'REP02', key 3472867307 (0xceffbfeb), SQL <

>.

2020-03-19 22:59:48 ERROR OGG-01668 PROCESS ABENDING.

GGSCI (gg2 as oggadmin@MOORSE) 36> info rep02

REPLICAT REP02 Last Started 2020-03-18 22:57 Status ABENDED

INTEGRATED

Checkpoint Lag 00:00:00 (updated 23:40:01 ago)

Log Read Checkpoint File /oracle/app/oracle/product/ogg/dirdat/ur000000000

2020-03-18 22:58:24.002309 RBA 4215

alter REPLICAT rep02,extseqno 1,extrba 4215

GGSCI (gg2 as oggadmin@MOORSE) 41> alter REPLICAT rep02,extseqno 1,extrba 4215

2020-03-19 23:08:59 INFO OGG-06594 Replicat REP02 has been altered. Even the start up position might be updated, duplicate suppression remains active in next startup. To override duplicate suppression, start REP02 with NOFILTERDUPTRANSACTIONS option.

REPLICAT (Integrated) altered.

GGSCI (gg2 as oggadmin@MOORSE) 42> start replicat rep02,aftercsn 1845914

Sending START request to MANAGER ...

REPLICAT REP02 starting

2020-03-19 23:10:57 ERROR OGG-02513 REPLICAT REP02 is not registered with the database.

2020-03-19 23:10:57 ERROR OGG-01668 PROCESS ABENDING.

GGSCI (gg2 as oggadmin@MOORSE) 46> dblogin userid oggadmin,password oggadmin

Successfully logged into database.

GGSCI (gg2 as oggadmin@MOORSE) 47> REGISTER REPLICAT rep02 DATABASE

2020-03-19 23:16:41 INFO OGG-02528 REPLICAT REP02 successfully registered with database as inbound server OGG$REP02.

DELETE REPLICAT rep02

UNREGISTER REPLICAT rep02 DATABASE

start replicat rep01,aftercsn 1845914

8.3.测试DDL

Scott schema:

create table city2(id varchar(20), name varchar(30),primary key(id));

create city2(id varchar(20), name varchar(30),primary key(id));

insert into city values('3','Vancouver');

Flight schema:

create table city (id varchar(10), name varchar(50), primary key (id));

insert into city values('1', 'Toronto');

INSERT INTO scott.dept VALUES (50,'MANAGEMENT','DENVOR');

INSERT INTO scott.dept VALUES (60,'DEVELOPMENT','SEATTLE');

查看replicat的SCN

ggsci

ggsci> info <replicat group> , showch

不是所有的replicat 都可以通过这种方式找到SCN

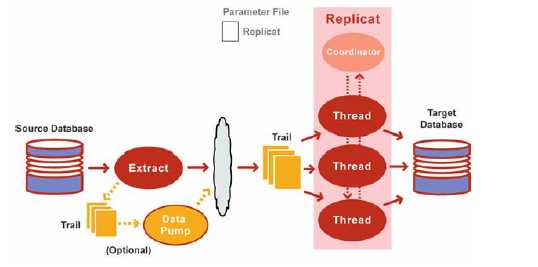

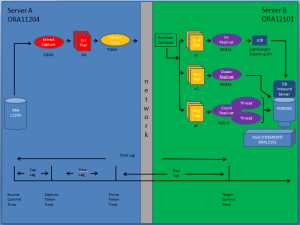

9.GoldenGate 12 – Classic, Coordinated and Integrated Replicat

Prior to the release of version 12.1, the replicat applied transactions to the target database serially. To achieve parallelism, tables could be split across multiple replicats to get data applied quicker and reduce lag. Drawbacks to this approach was the manual configuration of multiple replicats, trail files, and the limitation of not be able to split tables that had foreign key relationships across replicats.

The new Coordinated and Integrated Replicats can help you achieve transactions being applied in parallel on the target database without having to manually split out tables into multiple replicats.

Let’s take a look at the difference in the replicat modes.

9.1.Replicat Modes

Applies SQL serially.

Must be used if the database version is < 11.2.0.4

The configured replicat is called the coordinator. It starts additional replicats based on number of threads specified. The replicat name is limited to 5 characters and the threaded replicats will have this name appended with a 3 digit number ( RGGMA, RGGMA001, RGGMA002, … ). The coordinator sends SQL to the threads, which can be applied in parallel. The SQL is applied in committed order.

- Constructs logical change records (LCR) that represent source database DML transactions (in committed order). DDL is applied directly by Replicat. Transactions can be applied in parallel.

- Attaches to a background process in the target database known as a database inbound server by means of a lightweight streaming interface

- Transmits the LCRs to the inbound server, which applies the data to the target database

9.2.Configuration

Let’s setup one extract that will feed a heartbeat table into the three different replicat types. Here a diagram.

A. Parameter Files

Integrated Capture

EXTRACT cgga

SETENV (ORACLE_HOME="/u01/app/oracle/product/11.2.0.4")

SETENV (ORACLE_SID="ORA11204")

SETENV (NLS_LANG="AMERICAN_AMERICA.WE8MSWIN1252")

USERID c##ogg, PASSWORD ogg

TRANLOGOPTIONS MININGUSER c##ogg, MININGPASSWORD ogg

TRANLOGOPTIONS INTEGRATEDPARAMS (max_sga_size 200, parallelism 1)

LOGALLSUPCOLS

DISCARDFILE ./dirrpt/CGGA.dsc, APPEND Megabytes 100M

INCLUDE ./dirprm/gg_hb_capture.mac

EXTTRAIL ./dirdat/GA

#gg_hb_capture ();

Pump

EXTRACT pgga

SETENV (ORACLE_HOME="/u01/app/oracle/product/11.2.0.4")

SETENV (ORACLE_SID="ORA11204")

SETENV (NLS_LANG="AMERICAN_AMERICA.WE8MSWIN1252")

USERID c##ogg, PASSWORD ogg

DISCARDFILE ./dirrpt/PGGA.dsc, APPEND Megabytes 100M

RMTHOST db1.vm.com, MGRPORT 7812

INCLUDE ./dirprm/gg_hb_pump.mac

--

RMTTRAIL ./dirdat/a1

NOPASSTHRU

#gg_hb_pump ();

--

RMTTRAIL ./dirdat/a2

NOPASSTHRU

#gg_hb_pump ();

--

RMTTRAIL ./dirdat/a3

NOPASSTHRU

#gg_hb_pump ();

Classic Replicat

REPLICAT rgga1

SETENV (ORACLE_HOME="/u01/app/oracle/product/12.1.0.1")

SETENV (ORACLE_SID="ORA12101")

SETENV (NLS_LANG="AMERICAN_AMERICA.WE8MSWIN1252")

UserId c##ogg@pdbogg, Password ogg

ASSUMETARGETDEFS

DBOPTIONS NOSUPPRESSTRIGGERS

DBOPTIONS DEFERREFCONST

DISCARDFILE ./dirrpt/RGGA1.dsc, append, megabytes 100

INCLUDE ./dirprm/gg_hb_classic_replicat.mac

#gg_hb_classic_replicat ();

Coordinated Replicat

REPLICAT rgga2

SETENV (ORACLE_HOME="/u01/app/oracle/product/12.1.0.1")

SETENV (ORACLE_SID="ORA12101")

SETENV (NLS_LANG="AMERICAN_AMERICA.WE8MSWIN1252")

UserId c##ogg@pdbogg, Password ogg

ASSUMETARGETDEFS

DBOPTIONS NOSUPPRESSTRIGGERS

DBOPTIONS DEFERREFCONST

DISCARDFILE ./dirrpt/RGGA2.dsc, append, megabytes 100

INCLUDE ./dirprm/gg_hb_coordinated_replicat.mac

#gg_hb_coordinated_replicat (1);

Integrated Replicat

REPLICAT rgga3

SETENV (ORACLE_HOME="/u01/app/oracle/product/12.1.0.1")

SETENV (ORACLE_SID="ORA12101")

SETENV (NLS_LANG="AMERICAN_AMERICA.WE8MSWIN1252")

UserId c##ogg@pdbogg, Password ogg

ASSUMETARGETDEFS

DBOPTIONS NOSUPPRESSTRIGGERS

DBOPTIONS DEFERREFCONST

DISCARDFILE ./dirrpt/RGGA3.dsc, append, megabytes 100

INCLUDE ./dirprm/gg_hb_integrated_replicat.mac

#gg_hb_integrated_replicat ();

Macros

$ cat gg_hb_classic_replicat.mac

MACRO #gg_hb_classic_replicat

BEGIN

MAP ggmon.ggmon_heartbeat, TARGET ggmon.classic_heartbeat, &

COLMAP (USEDEFAULTS &

, SOURCE_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP') &

, TARGET_COMMIT = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

, CAP_SOURCE_COMMIT = @TOKEN('CAP_SOURCE_COMMIT') &

, CAPGROUP = @TOKEN ('CAPGROUP') &

, CAPTIME = @TOKEN ('CAPTIME') &

, PMPGROUP = @TOKEN ('PMPGROUP') &

, PMPTIME = @TOKEN ('PMPTIME') &

, REPGROUP = @GETENV ('GGENVIRONMENT', 'GROUPNAME') &

, REPTIME = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

);

MAP ggmon.ggmon_heartbeat, TARGET ggmon.classic_heartbeat_history, &

INSERTALLRECORDS &

, COLMAP (USEDEFAULTS &

, SOURCE_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP') &

, TARGET_COMMIT = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

, CAP_SOURCE_COMMIT = @TOKEN('CAP_SOURCE_COMMIT') &

, CAPGROUP = @TOKEN ('CAPGROUP') &

, CAPTIME = @TOKEN ('CAPTIME') &

, PMPGROUP = @TOKEN ('PMPGROUP') &

, PMPTIME = @TOKEN ('PMPTIME') &

, REPGROUP = @GETENV ('GGENVIRONMENT', 'GROUPNAME') &

, REPTIME = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

);

END;

$ cat gg_hb_coordinated_replicat.mac

MACRO #gg_hb_coordinated_replicat

PARAMS (#thread)

BEGIN

MAP ggmon.ggmon_heartbeat, TARGET ggmon.coordinated_heartbeat, THREAD (#thread) &

, &

COLMAP (USEDEFAULTS &

, SOURCE_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP') &

, TARGET_COMMIT = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

, CAP_SOURCE_COMMIT = @TOKEN('CAP_SOURCE_COMMIT') &

, CAPGROUP = @TOKEN ('CAPGROUP') &

, CAPTIME = @TOKEN ('CAPTIME') &

, PMPGROUP = @TOKEN ('PMPGROUP') &

, PMPTIME = @TOKEN ('PMPTIME') &

, REPGROUP = @GETENV ('GGENVIRONMENT', 'GROUPNAME') &

, REPTIME = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

);

MAP ggmon.ggmon_heartbeat, TARGET ggmon.coordinated_heartbeat_history, THREAD (#thread) &

INSERTALLRECORDS &

, COLMAP (USEDEFAULTS &

, SOURCE_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP') &

, TARGET_COMMIT = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

, CAP_SOURCE_COMMIT = @TOKEN('CAP_SOURCE_COMMIT') &

, CAPGROUP = @TOKEN ('CAPGROUP') &

, CAPTIME = @TOKEN ('CAPTIME') &

, PMPGROUP = @TOKEN ('PMPGROUP') &

, PMPTIME = @TOKEN ('PMPTIME') &

, REPGROUP = @GETENV ('GGENVIRONMENT', 'GROUPNAME') &

, REPTIME = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

);

END;

$ cat gg_hb_integrated_replicat.mac

MACRO #gg_hb_integrated_replicat

BEGIN

MAP ggmon.ggmon_heartbeat, TARGET ggmon.integrated_heartbeat, &

COLMAP (USEDEFAULTS &

, SOURCE_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP') &

, TARGET_COMMIT = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

, CAP_SOURCE_COMMIT = @TOKEN('CAP_SOURCE_COMMIT') &

, CAPGROUP = @TOKEN ('CAPGROUP') &

, CAPTIME = @TOKEN ('CAPTIME') &

, PMPGROUP = @TOKEN ('PMPGROUP') &

, PMPTIME = @TOKEN ('PMPTIME') &

, REPGROUP = @GETENV ('GGENVIRONMENT', 'GROUPNAME') &

, REPTIME = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

);

MAP ggmon.ggmon_heartbeat, TARGET ggmon.integrated_heartbeat_history, &

INSERTALLRECORDS &

, COLMAP (USEDEFAULTS &

, SOURCE_COMMIT = @GETENV ('GGHEADER', 'COMMITTIMESTAMP') &

, TARGET_COMMIT = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

, CAP_SOURCE_COMMIT = @TOKEN('CAP_SOURCE_COMMIT') &

, CAPGROUP = @TOKEN ('CAPGROUP') &

, CAPTIME = @TOKEN ('CAPTIME') &

, PMPGROUP = @TOKEN ('PMPGROUP') &

, PMPTIME = @TOKEN ('PMPTIME') &

, REPGROUP = @GETENV ('GGENVIRONMENT', 'GROUPNAME') &

, REPTIME = @DATE ('YYYY-MM-DD HH:MI:SS.FFFFFF','JTS',@GETENV ('JULIANTIMESTAMP')) &

);

END;

B. Creating Extract and Replicats

Oracle 11.2.0.4 Database

DBLogin UserId c##ogg, Password ogg

MiningDBLogin UserId c##ogg, Password ogg

REGISTER EXTRACT cgga DATABASE

ADD SchemaTranData ggmon

ADD EXTRACT cgga, INTEGRATED TRANLOG, BEGIN NOW, DESC "***** Integrated Capture *****"

ADD EXTTRAIL ./dirdat/GA, EXTRACT cgga

ADD EXTRACT pgga, EXTTRAILSOURCE ./dirdat/GA, desc "***** Pump *****"

ADD RMTTRAIL ./dirdat/a1, EXTRACT pgga

ADD RMTTRAIL ./dirdat/a2, EXTRACT pgga

ADD RMTTRAIL ./dirdat/a3, EXTRACT pgga

GGSCI (db1.vm.com) 9> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT RUNNING CGGA 00:00:10 00:00:02

Description "***** Integrated Capture *****"

EXTRACT RUNNING PGGA 00:00:00 00:00:08

Description "***** Pump *****"

Oracle 12.1.0.1 Database

DBLogin UserId c##ogg, Password ogg

MiningDBLogin UserId c##ogg, Password ogg

DBLogin UserId c##ogg@pdbogg, Password ogg

-- Classic Replicat

ADD REPLICAT rgga1, ExtTrail ./dirdat/a1, checkpointtable pdbogg.c##ogg.checkpoint, desc "***** Classic Replicat *****"

-- Coordinated Replicat

ADD REPLICAT rgga2, Coordinated MaxThreads 2, ExtTrail ./dirdat/a2, checkpointtable pdbogg.c##ogg.checkpoint, desc "***** Coordinated Replicat *****"

-- Integrated Replicat

ADD REPLICAT rgga3, Integrated, ExtTrail ./dirdat/a3, desc "***** Integrated Replicat *****"

GGSCI (db1.vm.com) 8> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

REPLICAT RUNNING RGGA1 00:00:00 00:00:07

Description "***** Classic Replicat *****"

REPLICAT RUNNING RGGA2 00:00:00 00:00:07

Description "***** Coordinated Replicat *****"

REPLICAT RUNNING RGGA3 00:00:00 00:00:05

Description "***** Integrated Replicat *****"

C. Push Data Though via Heartbeat Table

After starting up the heartbeat table, we can query to see the data being replicated across each replicat type. The extract and replicat names can be seen in the output. Note that name of the coordinated replicat group has the three digit number appended to the original name.

Please keep in mind that this configuration was done on a small virtual machine, so the lag times are a bit high, but this blog was only about the functionality of the different replicat types.

GGMON:pdbogg> @all_rep_lag.sql

***********************************************************

Heartbeat

***********************************************************

REPLICAT CAP LAST CAP PMP REP TOTAL

TYPE DB NAME CAPGROUP PMPGROUP REPGROUP UPDATE LAG LAG LAG LAG

----------- -------- -------- -------- -------- -------------- ------ ------ ------ ------

Classic ORA11204 CGGA PGGA RGGA1 03/06 02:42:31 5.9 5.3 2.3 13.5

Coordinated ORA11204 CGGA PGGA RGGA2001 03/06 02:42:31 5.9 5.3 3.0 14.2

Integrated ORA11204 CGGA PGGA RGGA3 03/06 02:42:31 5.9 5.3 12.6 23.8

***********************************************************

Heartbeat History

***********************************************************

AVG AVG AVG AVG

REPLICAT CAP CAP PMP REP TOTAL

TYPE DB NAME CAPGROUP PMPGROUP REPGROUP LAG LAG LAG LAG

----------- -------- -------- -------- -------- ------ ------ ------ ------

Classic ORA11204 CGGA PGGA RGGA1 3.6 5.4 4.1 13.2

Coordinated ORA11204 CGGA PGGA RGGA2001 3.6 5.4 2.5 11.6

Integrated ORA11204 CGGA PGGA RGGA3 3.6 5.4 10.0 19.1