Oracle Lab – Exadata Simulation Setup

1.Environment Preparation

1.1.Software Packages

Oracle Linux

For Cell Server:

Download from Oracle e-delivery

Oracle Linux Release 5 Update 10 for x86_64 (64 Bit) Name:V40139-01

For DB Server

Oracle Linux 7.4 UEK(Unbreakable Enterprise Kernel)

V921569-01-7.4.0.0.0-x86_64.iso

Exadata Storage Server:

Oracle Exadata Storage Server version: 11.2.3.2.1 for Linux x86-64

zip file name: V36290-01.zip

download from https://edelivery.oracle.com

Grid Infrastructure Software:

Grid version 12.2.0.1 (the GRID disk group requires 40GB)

Grid version 12.1.0.2 (used this one; requires 5GB)

linuxx64_12201_grid_home.zip

Database Software:

Database Software 12.2.0.1

linuxx64_12201_database.zip

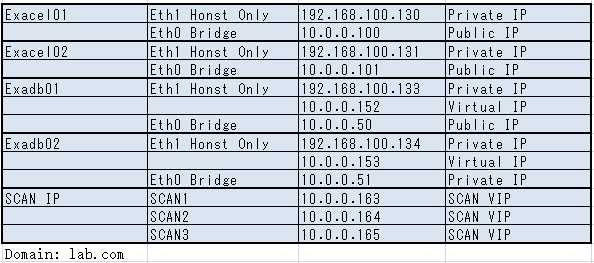

1.2.IP Address

Two storage cell servers and two RAC nodes:

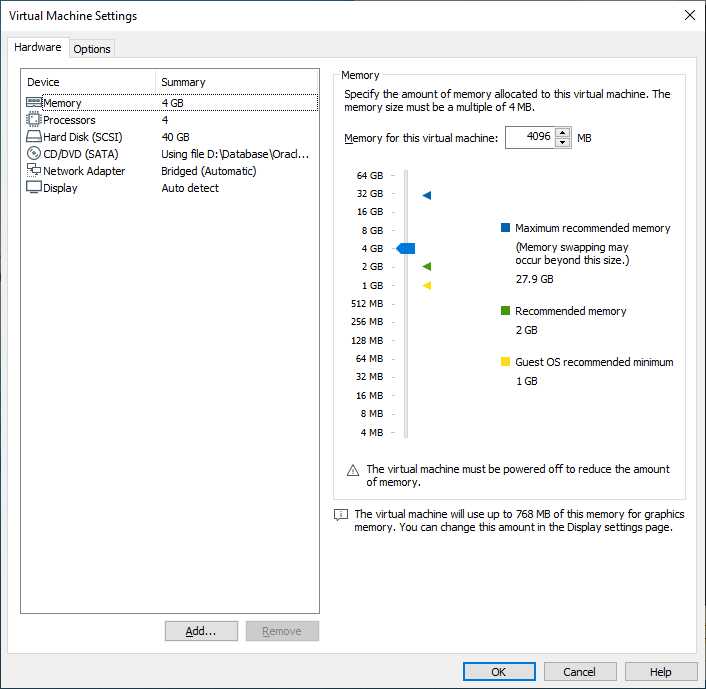

1.3.Hardware Configuration

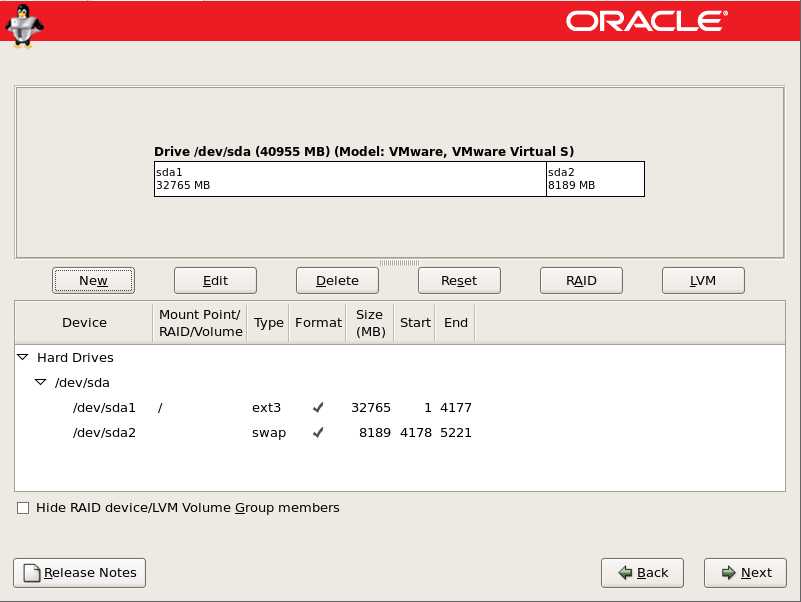

Cell Server:

Memory: 8GB

Disk Space: 40GB

SWAP Size: 8GB

CentOS suggests swap size to be:

– Twice the size of RAM if RAM is less than 2 GB

– Size of RAM + 2 GB if RAM size is more than 2 GB, e.g. 5GB of swap for 3GB of RAM
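The sizing rule above can be sketched as a small shell function. This is a minimal sketch that assumes whole-gigabyte RAM values; the name suggested_swap_gb is hypothetical and is not part of any distribution tooling.

```shell
# Sketch of the swap sizing rule quoted above (whole GB only).
# suggested_swap_gb is a hypothetical helper name, not a system command.
suggested_swap_gb() {
    ram_gb=$1
    if [ "$ram_gb" -lt 2 ]; then
        # Less than 2 GB of RAM: twice the RAM size
        echo $((ram_gb * 2))
    else
        # 2 GB of RAM or more: RAM size plus 2 GB
        echo $((ram_gb + 2))
    fi
}

suggested_swap_gb 3   # prints 5, matching the example in the rule
suggested_swap_gb 8   # prints 10
```

By this rule an 8GB machine would get 10GB of swap; this lab uses 8GB.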

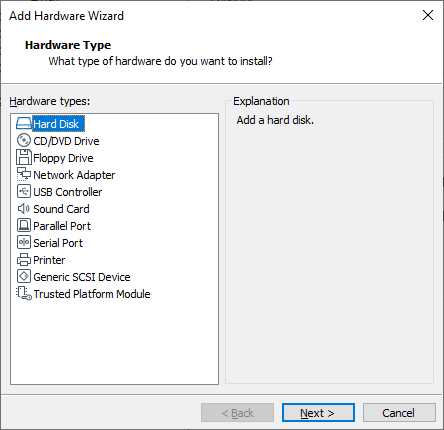

Storage Server 1: DATA, FLASH, GRID total 4GB:

exacel01_DATA01 5G GRID

exacel01_DATA02 5G DATA

exacel01_DATA03 5G DBFS

exacel01_DATA04 5G RECO(FRA)

exacel01_FLASH01 5G Cell Server Flashcache

exacel01_FLASH02 5G Cell Server Flashcache

Storage Server 2: the same as server 1

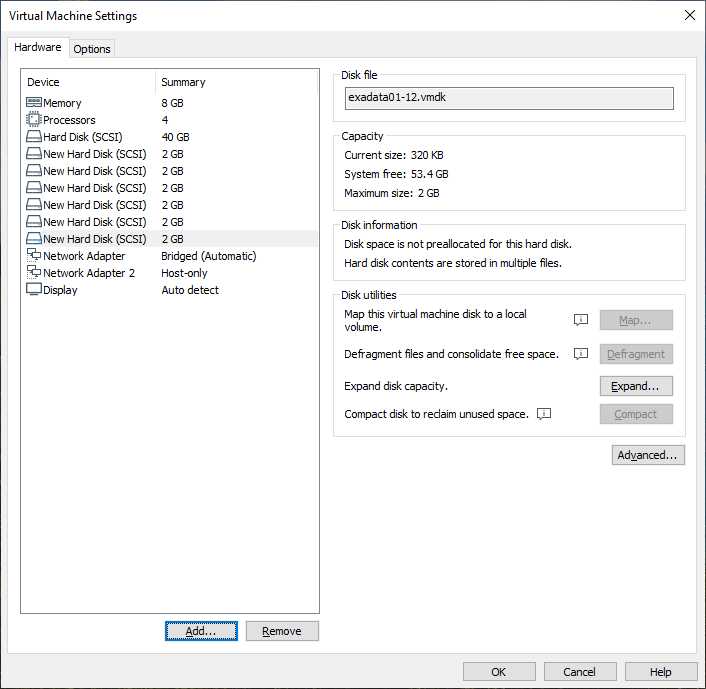

DB Server:

Memory: 8GB

Disk Space: 40GB

SWAP Size: 8GB

2.Storage Server Installation

2.1.Partitioning

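2.2.Disable the Graphical Interface

On a systemd host (Oracle Linux 7):

systemctl set-default multi-user.target

On a SysV init host such as the Oracle Linux 5 cell server, edit /etc/inittab instead:

vi /etc/inittab

id:5:initdefault:

Change it to: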

id:3:initdefault:
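The inittab change can also be scripted. The following is a minimal sketch, not the procedure used in this lab; set_text_runlevel is a hypothetical helper name, and the demonstration runs against a scratch file rather than the real /etc/inittab.

```shell
# Sketch: switch the default runlevel from 5 (graphical) to 3 (text) in an
# inittab-style file. set_text_runlevel is a hypothetical helper name.
set_text_runlevel() {
    file=$1
    # Keep a backup copy, then rewrite only the initdefault line
    cp "$file" "$file.bak"
    sed 's/^id:5:initdefault:/id:3:initdefault:/' "$file.bak" > "$file"
}

# Demonstration on a scratch file rather than the real /etc/inittab
demo=$(mktemp)
echo 'id:5:initdefault:' > "$demo"
set_text_runlevel "$demo"
cat "$demo"            # prints id:3:initdefault:
rm -f "$demo" "$demo.bak"
```

On the cell server the same function could be pointed at /etc/inittab as root; the backup copy makes it easy to revert.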

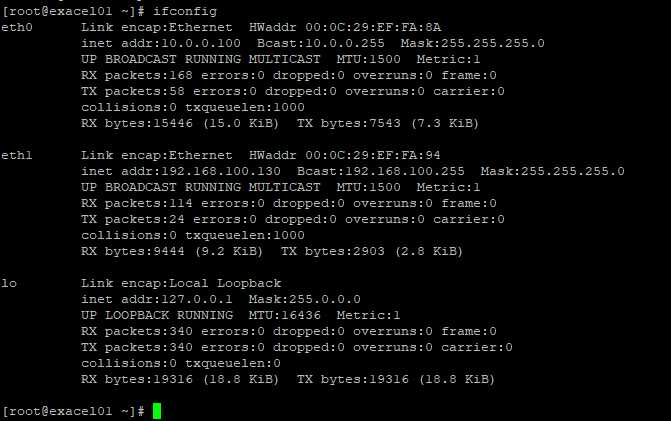

2.3.Configure Network Interfaces

2.4.Disable the Firewall

/etc/init.d/iptables stop

chkconfig iptables off

2.5.Configure SELinux

vi /etc/selinux/config

SELINUX=disabled

2.6.Install FTP

yum -y install vsftpd

On a systemd host (Oracle Linux 7):

systemctl start vsftpd.service

systemctl enable vsftpd.service

On a SysV init host (Oracle Linux 5, the cell server):

service vsftpd start

chkconfig vsftpd on

2.7.hosts File

vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.100 exacel01 exacel01.lab.com

2.8.Hostname

vi /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=exacel01

NOZEROCONF=yes #disable the zeroconf (169.254.0.0/16) route

2.9./etc/sysctl.conf

fs.file-max = 6815744

fs.aio-max-nr = 3145728

2.10./etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

2.11./etc/grub.conf

vi /etc/grub.conf

default=1

rpmmacros?

2.12.FTP upload V36290-01.zip

Use FileZilla or WinSCP to upload V36290-01.zip into /stage. Create the directories first:

mkdir -p /var/log/oracle

chmod -R 775 /var/log/oracle

mkdir -p /stage

chmod -R 777 /stage

2.13.Install cell rpm

[root@exacel01 stage]# unzip V36290-01.zip

[root@exacel01 stage]# tar -xvf cellImageMaker_11.2.3.2.1_LINUX.X64_130109-1.x86_64.tar

[root@exacel01 stage]# ls -l /stage/dl180/boot/cellbits/cell.bin

-rwxrwxr-x 1 root root 245231205 Jan 9 2013 /stage/dl180/boot/cellbits/cell.bin

[root@exacel01 stage]# mkdir cellbin

[root@exacel01 stage]# cp -rv dl180/boot/cellbits/cell.bin cellbin

[root@exacel01 stage]# ls -l cellbin

total 239484

-rwxr-xr-x 1 root root 245231205 Apr 2 22:29 cell.bin

[root@exacel01 stage]# cd cellbin

[root@exacel01 cellbin]# unzip cell.bin

Archive: cell.bin

warning [cell.bin]: 6408 extra bytes at beginning or within zipfile

(attempting to process anyway)

inflating: cell-11.2.3.2.1_LINUX.X64_130109-1.x86_64.rpm

inflating: jdk-1_5_0_15-linux-amd64.rpm

[root@exadata01 cellbin]# rpm -ivh jdk-1_5_0_15-linux-amd64.rpm

Preparing... ########################################### [100%]

1:jdk ########################################### [100%]

[root@exacel01 cellbin]# rpm -ivh cell-11.2.3.2.1_LINUX.X64_130109-1.x86_64.rpm

Preparing... ########################################### [100%]

Pre Installation steps in progress ...

1:cell ########################################### [100%]

Post Installation steps in progress ...

Set cellusers group for /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/cellsrv/deploy/log directory

Set 775 permissions for /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/cellsrv/deploy/log directory

/

/

Installation SUCCESSFUL.

Starting RS and MS... as user celladmin

Done. Please Login as user celladmin and create cell to startup CELLSRV to complete cell configuration.

WARNING: Using the current shell as root to restart cell services.

Restart the cell services using a new shell.

Error: after restarting the machine, eth0 was lost.

# ifup eth0

Device eth0 does not seem to be present, delaying initialisation

# service network restart

Bringing up interface eth0: Device eth0 does not seem to be present, delaying initialization

Solution: shut down the machine and change the network adapter type to E1000.

3.Configure Disks

3.1.Configure Virtual Machine Disks

3.2.Start the Virtual Machine

[root@exacel01 dev]# cd /dev

[root@exacel01 dev]# ls -l sd*

brw-r----- 1 root disk 8, 0 Apr 3 14:30 sda

brw-r----- 1 root disk 8, 1 Apr 3 14:31 sda1

brw-r----- 1 root disk 8, 2 Apr 3 14:30 sda2

brw-r----- 1 root disk 8, 16 Apr 3 14:30 sdb

brw-r----- 1 root disk 8, 32 Apr 3 14:30 sdc

brw-r----- 1 root disk 8, 48 Apr 3 14:30 sdd

brw-r----- 1 root disk 8, 64 Apr 3 14:30 sde

brw-r----- 1 root disk 8, 80 Apr 3 14:30 sdf

brw-r----- 1 root disk 8, 96 Apr 3 14:30 sdg

3.3.Link Raw Disks

[root@exacel01 dev]# fdisk -l 2>/dev/null |grep 'B,'

Disk /dev/sda: 42.9 GB, 42949672960 bytes

Disk /dev/sdb: 2147 MB, 2147483648 bytes

Disk /dev/sdc: 2147 MB, 2147483648 bytes

Disk /dev/sdd: 2147 MB, 2147483648 bytes

Disk /dev/sde: 2147 MB, 2147483648 bytes

Disk /dev/sdf: 2147 MB, 2147483648 bytes

Disk /dev/sdg: 2147 MB, 2147483648 bytes

mkdir -p /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw

cd /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw

ln -s /dev/sdb exacel01_DATA01

ln -s /dev/sdc exacel01_DATA02

ln -s /dev/sdd exacel01_DISK03

ln -s /dev/sde exacel01_DISK04

ln -s /dev/sdf exacel01_FLASH01

ln -s /dev/sdg exacel01_FLASH02
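The six ln commands above can be driven from a single device-to-name mapping. This is a sketch under the assumption that the devices are /dev/sdb through /dev/sdg as shown; link_raw_disks and raw_disk_map are hypothetical helper names.

```shell
# Sketch: create the raw-disk symlinks from a "device name" mapping.
# link_raw_disks and raw_disk_map are hypothetical helper names; the mapping
# mirrors the ln commands above.
link_raw_disks() {
    raw_dir=$1
    mkdir -p "$raw_dir" || return 1
    # Read "device link-name" pairs from standard input
    while read -r dev name; do
        [ -n "$dev" ] || continue
        ln -sf "$dev" "$raw_dir/$name"
    done
}

raw_disk_map() {
    cat <<'EOF'
/dev/sdb exacel01_DATA01
/dev/sdc exacel01_DATA02
/dev/sdd exacel01_DISK03
/dev/sde exacel01_DISK04
/dev/sdf exacel01_FLASH01
/dev/sdg exacel01_FLASH02
EOF
}

# On the cell server, as root:
#   raw_disk_map | link_raw_disks /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw
```

Keeping the mapping in one place makes it easier to repeat the step on the second cell by changing only the name prefix.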

[root@exacel01 raw]# ls -l

total 0

lrwxrwxrwx 1 root root 8 Apr 3 18:08 exacel01_DATA01 -> /dev/sdb

lrwxrwxrwx 1 root root 8 Apr 3 18:08 exacel01_DATA02 -> /dev/sdc

lrwxrwxrwx 1 root root 8 Apr 3 18:08 exacel01_DISK03 -> /dev/sdd

lrwxrwxrwx 1 root root 8 Apr 3 18:08 exacel01_DISK04 -> /dev/sde

lrwxrwxrwx 1 root root 8 Apr 3 18:08 exacel01_FLASH01 -> /dev/sdf

lrwxrwxrwx 1 root root 8 Apr 3 18:08 exacel01_FLASH02 -> /dev/sdg

cd

cellcli -e list physicaldisk

[root@exacel01 ~]# cellcli -e list physicaldisk

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA01 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA01 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA02 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA02 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK03 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK03 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK04 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK04 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_GRID01 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_GRID01 not present

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_GRID02 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_GRID02 not present

[root@exacel01 ~]# cellcli -e list lun

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA01 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA01 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA02 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DATA02 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK03 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK03 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK04 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_DISK04 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01 normal

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02 /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02 normal

4.Create Cell Server

4.1.Load rds

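The Linux modprobe command handles loadable kernel modules automatically.

modprobe can load a single named module or a set of interdependent modules. It uses the dependency information generated by depmod to decide which modules to load. If an error occurs while loading, modprobe unloads the whole set of modules.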

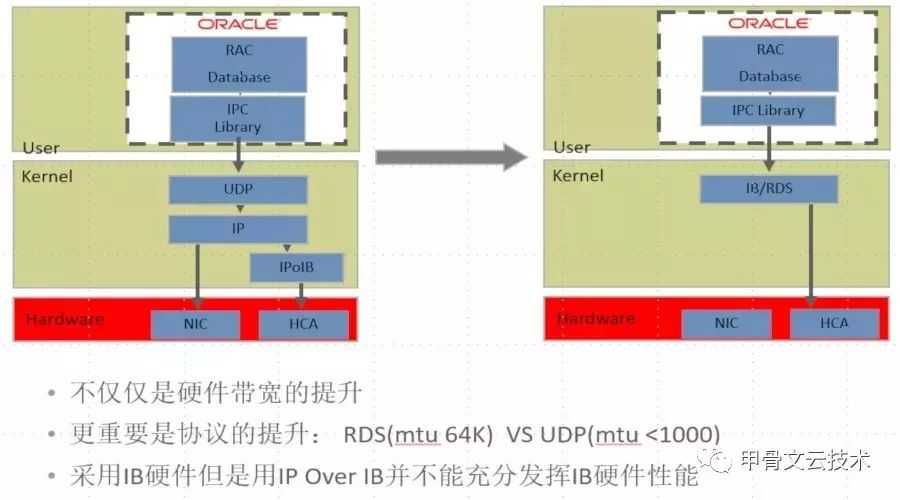

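Exadata uses the RDS protocol to transfer data blocks between cluster nodes. For the RAC interconnect, Oracle adopted RDS (Reliable Datagram Sockets), a protocol developed specifically for InfiniBand.

As the figure above shows, RDS greatly reduces the number of protocol-stack layers involved when instances on different nodes perform Cache Fusion communication, and it can directly exploit the lossless nature of InfiniBand itself. A larger MTU greatly reduces the number of transfers needed to ship Oracle data blocks during Cache Fusion cache-coherency communication.

In theory, RDS can run on any x86 platform with InfiniBand hardware, but it depends heavily on the operating system, the hardware firmware, and so on. Outside Exadata, many hardware platforms running Oracle RAC use InfiniBand hardware but do not dare enable RDS in production. They still use UDP over IP over IB and therefore cannot take full advantage of the InfiniBand interconnect.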

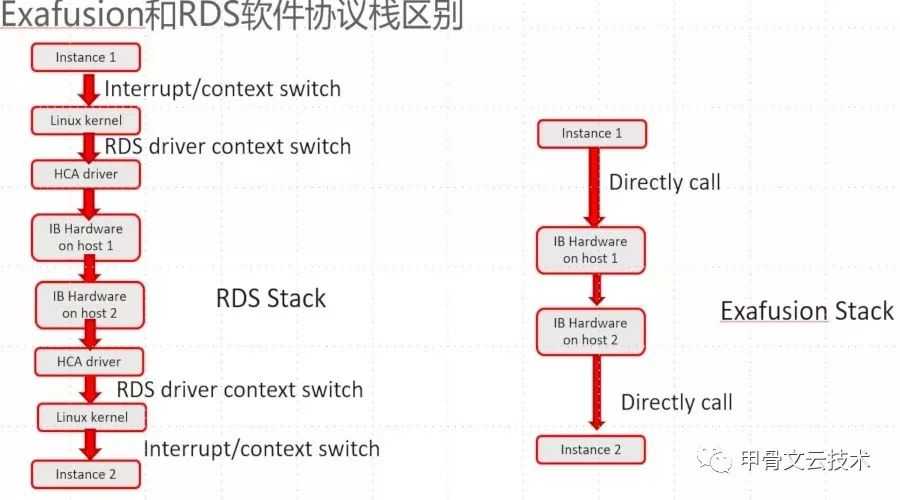

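Although RDS is already much better than UDP over IP over IB, that alone is not enough. With RDS, communication still requires interrupt-driven calls from the database instance's point of view, that is, system calls from user mode into kernel mode. The context switches these calls cause are very expensive and add significant latency, so a better and newer approach was needed. Oracle Exadata System Software 12.1.2.1.0 introduced a new protocol for RAC interconnect communication, the Exafusion Direct-to-Wire Protocol. This feature can be enabled only on the Exadata platform. It also requires database version 12.1.0.2.13 or later. In the 12.1.0.2 release it is disabled by default and must be enabled by setting exafusion_enabled=true in the spfile. Starting with 12.2, this parameter is enabled automatically on Exadata and the new RAC interconnect protocol is used; Oracle also recommends using it in 12.2 or later. The figure compares the call paths of Exafusion and RDS for cross-node communication.

As the figure shows, the Exafusion Direct-to-Wire Protocol avoids interrupt-driven calls, which greatly speeds up cross-node cache synchronization. It truly uses RDMA (remote direct memory access) with no context switches. This series of software-level enhancements is what makes Exadata a strong platform for running Oracle Database, and it turns the vision Oracle had when it launched the RAC architecture 15 years ago into reality: in a high-concurrency, high-capacity production environment, the number of database nodes is transparent to the application, which only needs to connect to the RAC SCAN to get automatic load balancing without worrying about application partitioning. The result is a horizontally scalable, cache-coherent distributed database environment that is transparent to applications.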

modprobe rds

modprobe rds_tcp

modprobe rds_rdma

vi /etc/modprobe.d/rds.conf

install rds /sbin/modprobe --ignore-install rds && /sbin/modprobe rds_tcp && /sbin/modprobe rds_rdma
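After loading the modules it can be useful to confirm that all three are present. The following is a minimal sketch that reads a /proc/modules-style listing; rds_modules_missing is a hypothetical helper name, and whether rds_rdma loads at all depends on the kernel and hardware in the virtual machine.

```shell
# Sketch: print the RDS modules that are NOT listed in a /proc/modules-style
# file. rds_modules_missing is a hypothetical helper name.
rds_modules_missing() {
    modules_file=${1:-/proc/modules}
    for mod in rds rds_tcp rds_rdma; do
        # Each line starts with the module name followed by a space
        if ! grep -q "^${mod} " "$modules_file"; then
            echo "$mod"
        fi
    done
}

# Usage on the cell server:
#   rds_modules_missing      (prints nothing when all three modules are loaded)
```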

4.2.Tail Alert Log

alert log:

tail -f /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/log/diag/asm/cell/exacel01/trace/alert.log

4.3.Create cell

Create cell:

[root@exacel01 modprobe.d]# cellcli -e create cell exacel01 interconnect1=eth0

Cell exacel01 successfully created

Starting CELLSRV services...

The STARTUP of CELLSRV services was successful.

Flash cell disks, FlashCache, and FlashLog will be created...

CellDisk FD_00_exacel01 successfully created

CellDisk FD_01_exacel01 successfully created

Flash log exacel01_FLASHLOG successfully created

Flash cache exacel01_FLASHCACHE successfully created

***error***

CELL-02664: Failed to create FLASHCACHE on cell disk FD_01_exacel01. Received error: CELL-02559: There is a communication error between MS and CELLSRV.

[root@exacel01 modprobe.d]#

Fixed this problem by increasing memory from 6GB to 8GB.

Reference: command to drop cell server:

cellcli -e drop cell exacel01 force

T_WORK

[root@exacel01 ~]# echo $T_WORK

/opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks

4.4.Start or Shutdown Services

To check services status:

[root@exacel02 ~]# service celld status

rsStatus: running

msStatus: running

cellsrvStatus: running
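The status output above lends itself to a scripted health check. This is a sketch that assumes the three-line "xxStatus: value" layout shown here; all_cell_services_running is a hypothetical helper name.

```shell
# Sketch: succeed only if every "<name>Status:" line reads "running".
# all_cell_services_running is a hypothetical helper; it reads the status
# text from standard input.
all_cell_services_running() {
    awk '
        /Status:/ { seen++; if ($2 != "running") bad++ }
        END { if (seen == 0 || bad > 0) exit 1 }
    '
}

# Usage on a cell server:
#   service celld status | all_cell_services_running && echo "cell services OK"
```

Treating empty input as a failure avoids reporting success when the status command itself produced nothing.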

To start up or shut down all services:

CellCLI> alter cell startup services all

CellCLI> alter cell shutdown services all

To restart services:

cellcli -e alter cell restart services all

or

CellCLI> alter cell restart services all

To shut down the CELLSRV service:

[root@exacel01 modprobe.d]# cellcli

CellCLI: Release 11.2.3.2.1 - Production on Sat Apr 03 15:41:24 MDT 2021

Copyright (c) 2007, 2012, Oracle. All rights reserved.

Cell Efficiency Ratio: 1

CellCLI> alter cell shutdown services CELLSRV

Stopping CELLSRV services...

The SHUTDOWN of CELLSRV services was successful.

4.5.Check cell detail

To check cell detail:

CellCLI> list cell detail

name: exacel01

bbuTempThreshold: 60

bbuChargeThreshold: 800

bmcType: absent

cellVersion: OSS_11.2.3.2.1_LINUX.X64_130109

cpuCount: 4

diagHistoryDays: 7

fanCount: 1/1

fanStatus: normal

flashCacheMode: WriteThrough

id: afc746ac-a3c7-460e-98fd-be0fa2ecc4ac

interconnectCount: 2

interconnect1: eth0

iormBoost: 0.0

ipaddress1: 10.0.0.100/24

kernelVersion: 2.6.18-371.el5

makeModel: Fake hardware

metricHistoryDays: 7

offloadEfficiency: 1.0

powerCount: 1/1

powerStatus: normal

releaseVersion: 11.2.3.2.1

releaseTrackingBug: 14522699

status: online

temperatureReading: 0.0

temperatureStatus: normal

upTime: 0 days, 0:15

cellsrvStatus: running

msStatus: running

rsStatus: running

Note: Oracle marks the installation as “makeModel: Fake hardware”.

However, the cell server is up and running.

4.6.list flashcache detail

CellCLI> list flashcache detail

name: exacel01_FLASHCACHE

cellDisk:

creationTime: 2021-04-03T16:06:12-06:00

degradedCelldisks: FD_00_exacel01

effectiveCacheSize: 0

id: d70f8772-577f-4877-8d0c-a6014ab8dc5a

size: 720M

status: critical

Commands to recreate the flash cache:

drop flashcache

drop celldisk all flashdisk force

create celldisk all flashdisk

create flashcache

4.7.list flashlog detail

CellCLI> list flashlog detail

name: exacel01_FLASHLOG

cellDisk: FD_01_exacel01,FD_00_exacel01

creationTime: 2021-04-03T15:42:28-06:00

degradedCelldisks:

effectiveSize: 512M

efficiency: 100.0

id: 633f4ece-ca6b-4a92-830e-f9c37db17584

size: 512M

status: normal

4.8.list celldisk detail

CellCLI> list celldisk detail

name: FD_00_exacel01

comment:

creationTime: 2021-04-03T18:10:48-06:00

deviceName: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01

devicePartition: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01

diskType: FlashDisk

errorCount: 0

freeSpace: 0

id: 9070c51e-3c39-4857-993e-563e4e2273cf

interleaving: none

lun: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01

physicalDisk: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH01

raidLevel: "RAID 0"

size: 2G

status: normal

name: FD_01_exacel01

comment:

creationTime: 2021-04-03T18:10:48-06:00

deviceName: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02

devicePartition: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02

diskType: FlashDisk

errorCount: 0

freeSpace: 0

id: bafb40ae-20be-405f-8f9c-7c54280d1103

interleaving: none

lun: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02

physicalDisk: /opt/oracle/cell11.2.3.2.1_LINUX.X64_130109/disks/raw/exacel01_FLASH02

raidLevel: "RAID 0"

size: 2G

status: normal

4.9.create griddisk

CellCLI> create celldisk all

CellDisk CD_DATA01_exacel01 successfully created

CellDisk CD_DATA02_exacel01 successfully created

CellDisk CD_DISK03_exacel01 successfully created

CellDisk CD_DISK04_exacel01 successfully created

exacel01:

create griddisk GI_exacel01_DISK01 celldisk=CD_DATA01_exacel01,size=2.5G

create griddisk GI_exacel01_DISK02 celldisk=CD_DATA01_exacel01

create griddisk DATA_exacel01_DISK01 celldisk=CD_DATA02_exacel01,size=2.5G

create griddisk DATA_exacel01_DISK02 celldisk=CD_DATA02_exacel01

create griddisk DBFS_exacel01_DISK01 celldisk=CD_DISK03_exacel01,size=2.5G

create griddisk DBFS_exacel01_DISK02 celldisk=CD_DISK03_exacel01

create griddisk RECO_exacel01_DISK01 celldisk=CD_DISK04_exacel01,size=2.5G

create griddisk RECO_exacel01_DISK02 celldisk=CD_DISK04_exacel01

CellCLI> list griddisk

DATA_exacel01_DISK01 active

DATA_exacel01_DISK02 active

DBFS_exacel01_DISK01 active

DBFS_exacel01_DISK02 active

GI_exacel01_DISK01 active

GI_exacel01_DISK02 active

RECO_exacel01_DISK01 active

RECO_exacel01_DISK02 active

exacel02:

create griddisk GI_exacel02_DISK01 celldisk=CD_DATA01_exacel02,size=2.5G

create griddisk GI_exacel02_DISK02 celldisk=CD_DATA01_exacel02

create griddisk DATA_exacel02_DISK01 celldisk=CD_DATA02_exacel02,size=2.5G

create griddisk DATA_exacel02_DISK02 celldisk=CD_DATA02_exacel02

create griddisk DBFS_exacel02_DISK01 celldisk=CD_DISK03_exacel02,size=2.5G

create griddisk DBFS_exacel02_DISK02 celldisk=CD_DISK03_exacel02

create griddisk RECO_exacel02_DISK01 celldisk=CD_DISK04_exacel02,size=2.5G

create griddisk RECO_exacel02_DISK02 celldisk=CD_DISK04_exacel02

CellCLI> list griddisk

DATA_exacel02_DISK01 active

DATA_exacel02_DISK02 active

DBFS_exacel02_DISK01 active

DBFS_exacel02_DISK02 active

GI_exacel02_DISK01 active

GI_exacel02_DISK02 active

RECO_exacel02_DISK01 active

RECO_exacel02_DISK02 active
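Since the two cells use the same layout, the create griddisk commands can be generated rather than typed twice. This is a minimal sketch; gen_griddisk_cmds is a hypothetical helper, and the prefix-to-celldisk pairs and the default size simply mirror the commands listed above.

```shell
# Sketch: print the CellCLI "create griddisk" commands for one cell.
# gen_griddisk_cmds is a hypothetical helper; the pairs below and the default
# size mirror the commands listed above.
gen_griddisk_cmds() {
    cell=$1
    size=${2:-2.5G}
    for pair in GI:DATA01 DATA:DATA02 DBFS:DISK03 RECO:DISK04; do
        prefix=${pair%%:*}
        disk=${pair##*:}
        # First grid disk gets an explicit size, the second takes the rest
        echo "create griddisk ${prefix}_${cell}_DISK01 celldisk=CD_${disk}_${cell},size=${size}"
        echo "create griddisk ${prefix}_${cell}_DISK02 celldisk=CD_${disk}_${cell}"
    done
}

gen_griddisk_cmds exacel02
```

Each printed line could then be passed to cellcli -e on the matching cell. The optional second argument overrides the size, for example gen_griddisk_cmds exacel01 1G.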

4.10.Reference Cellcli

alter commands:

CellCLI> alter cell shutdown services rs --> To shutdown the Restart Server service

CellCLI> alter cell shutdown services MS --> To shutdown the Management Server service

CellCLI> alter cell shutdown services CELLSRV --> To shutdown the Cell Services

CellCLI> alter cell shutdown services all -->To shutdown the RS, CELLSRV and MS services

CellCLI> alter cell restart services rs

CellCLI> alter cell restart services all

CellCLI> alter cell led on

CellCLI> alter cell led off

CellCLI> alter cell validate mail

CellCLI> alter cell validate configuration

CellCLI> alter cell smtpfromaddr='cell07@orac.com'

CellCLI> alter cell smtpfrom='Exadata Cell 07'

CellCLI> alter cell smtptoaddr='satya@orac.com'

CellCLI> alter cell emailFormat='text'

CellCLI> alter cell emailFormat='html'

CellCLI> alter cell validate snmp type=ASR - Automatic Service Requests (ASRs)

CellCLI> alter cell snmpsubscriber=((host='snmp01.orac.com',type=ASR))

CellCLI> alter cell restart bmc - BMC, Baseboard Management Controller, controls the components of the cell.

CellCLI> alter cell configure bmc

CellCLI> alter physicaldisk 34:2,34:3 serviceled on

CellCLI> alter physicaldisk 34:6,34:9 serviceled off

CellCLI> alter physicaldisk harddisk serviceled on

CellCLI> alter physicaldisk all serviceled on

CellCLI> alter lun 0_10 reenable

CellCLI> alter lun 0_04 reenable force

CellCLI> alter celldisk FD_01_cell07 comment='Flash Disk'

CellCLI> alter celldisk all harddisk comment='Hard Disk'

CellCLI> alter celldisk all flashdisk comment='Flash Disk'

CellCLI> alter griddisk RECO_CD_10_cell06 comment='Used for Reco'

CellCLI> alter griddisk all inactive

CellCLI> alter griddisk RECO_CD_11_cell12 inactive

CellCLI> alter griddisk RECO_CD_08_cell01 inactive force

CellCLI> alter griddisk RECO_CD_11_cell01 inactive nowait

CellCLI> alter griddisk DATA_CD_00_CELL01,DATA_CD_02_CELL01,...DATA_CD_11_CELL01 inactive

CellCLI> alter griddisk all active

CellCLI> alter griddisk RECO_CD_11_cell01 active

CellCLI> alter griddisk all harddisk comment='Hard Disk'

CellCLI> alter ibport ibp2 reset counters

CellCLI> alter iormplan active

CellCLI> alter quarantine

CellCLI> alter threshold DB_IO_RQ_SM_SEC.PRODB comparison=">", critical=100

CellCLI> alter alerthistory

5.DB Server Virtual Machine Installation

5.1.VMware Machine Configuration

Select “Server with GUI”.

Partitions:

- Swap 6GB

- / 34GB

5.2.Grant sudo Privileges to the oracle and grid Users

chmod u+w /etc/sudoers

vi /etc/sudoers

oracle ALL=(ALL) NOPASSWD:ALL

grid ALL=(ALL) NOPASSWD:ALL

chmod u-w /etc/sudoers

5.3.Disable the Graphical Interface

systemctl set-default multi-user.target

5.4.Network Interface Configuration

exadb01:

ens32: bridge IP Address:10.0.0.50

ens33: hostonly IP Address:192.168.100.133

exadb02:

ens32: bridge IP Address:10.0.0.51

ens33: hostonly IP Address:192.168.100.134

5.5.Disable the Firewall

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld

5.6.Configure SELinux

vi /etc/selinux/config

SELINUX=disabled

reboot

5.7.Remove the virbr0 Virtual Interface

ifconfig virbr0 down

brctl delbr virbr0

systemctl disable libvirtd.service

systemctl mask libvirtd.service

5.8.Disable the avahi-daemon Service

systemctl stop avahi-daemon

systemctl disable avahi-daemon

systemctl list-unit-files|grep avah

5.9.Install FTP

yum -y install vsftpd

systemctl start vsftpd.service

systemctl enable vsftpd.service

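5.10.Hostname

Set the hostname with hostnamectl set-hostname exadb01 (Oracle Linux 7 reads it from /etc/hostname), then edit /etc/sysconfig/network: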

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=exadb01

NOZEROCONF=yes #disable the zeroconf (169.254.0.0/16) route

5.11.hosts File

vi /etc/hosts

#Public IP

10.0.0.50 exadb01.lab.com exadb01

10.0.0.51 exadb02.lab.com exadb02

#Virtual IP

10.0.0.152 exadb01-vip.lab.com exadb01-vip

10.0.0.153 exadb02-vip.lab.com exadb02-vip

#Private IP

192.168.100.133 exadb01-priv.lab.com exadb01-priv

192.168.100.134 exadb02-priv.lab.com exadb02-priv

#Storage Cell Servers

10.0.0.100 exacel01.lab.com exacel01

10.0.0.101 exacel02.lab.com exacel02

#Scan IP

10.0.0.163 exa-scan

10.0.0.164 exa-scan

10.0.0.165 exa-scan

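Verify the configuration (nslookup queries DNS and does not read /etc/hosts, so the names must also be registered in DNS):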

nslookup exadb01-vip

nslookup exadb02-vip

nslookup exa-scan
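Because these names are defined in /etc/hosts, it can also help to check the file itself. The following is a minimal sketch; hosts_lookup is a hypothetical helper name, not a system command.

```shell
# Sketch: list the addresses a hosts-format file maps to a given name.
# hosts_lookup is a hypothetical helper, not a system command.
hosts_lookup() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        $1 ~ /^#/ { next }      # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) print $1 }
    ' "$file"
}

# Usage on a DB server:
#   hosts_lookup exadb01-vip
#   hosts_lookup exa-scan
```

With the hosts file above, the exa-scan lookup would list all three SCAN addresses, which makes missing or mistyped entries easy to spot.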

6.DB Server Grid Software Installation Preparation

6.1.Install RPM Packages

yum install compat-libstdc++-33.i686 -y

yum install compat-libstdc++-33.x86_64 -y

yum install compat-libcap1.i686 -y

yum install compat-libcap1.x86_64 -y

yum install gcc.x86_64 -y

yum install gcc-c++.x86_64 -y

yum install ksh.x86_64 -y

yum install libaio-devel.i686 -y

yum install libaio-devel.x86_64 -y

yum install libstdc++-devel.i686 -y

yum install libstdc++-devel.x86_64 -y

yum install unixODBC-devel.i686 -y

yum install unixODBC-devel.x86_64 -y

yum install unixODBC.i686 -y

yum install unixODBC.x86_64 -y

6.2.Install the rlwrap Tool

rpm -qa | grep readline

yum search readline

yum -y install readline-devel

tar zxvf rlwrap-0.43.tar.gz

cd rlwrap-0.43

./configure

make

make install

6.3.Users, Groups, and Installation Directories

groupadd oinstall

groupadd dba

groupadd oper

groupadd asmadmin

groupadd asmdba

groupadd asmoper

useradd -g oinstall -G dba,asmadmin,asmdba,asmoper grid

usermod -g oinstall -G dba,oper,asmdba oracle

passwd grid

6.4.Linux Kernel Parameters

vi /etc/sysctl.conf

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

fs.aio-max-nr = 1048576

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

sysctl -p
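To put the shared memory values above in perspective, they can be converted to GiB. This is a worked arithmetic sketch that assumes a 4096-byte page size, which is typical on x86_64 but should be confirmed with getconf PAGE_SIZE.

```shell
# Sketch: convert the shared memory settings above into GiB.
# Assumes a 4096-byte page size (check with: getconf PAGE_SIZE).
page_size=4096
shmmax=68719476736      # kernel.shmmax, in bytes
shmall=4294967296       # kernel.shmall, in pages

shmmax_gib=$((shmmax / 1024 / 1024 / 1024))
shmall_gib=$((shmall * page_size / 1024 / 1024 / 1024))

echo "shmmax = ${shmmax_gib} GiB"   # prints shmmax = 64 GiB
echo "shmall = ${shmall_gib} GiB"   # prints shmall = 16384 GiB
```

Both values are far above the 8GB of RAM in these virtual machines, so here they act as upper bounds rather than as sizing for the actual memory.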

6.5./etc/security/limits.conf

vi /etc/security/limits.conf

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

6.6./etc/profile

vi /etc/profile

if [ $USER = "oracle" ] || [ $USER = "grid" ] ; then

if [ $SHELL = "/bin/ksh" ] ; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

6.7.Prepare Directories

mkdir -p /u01/app/grid/12.2.0.1/grid

mkdir -p /u02/app/oracle/product/12.2.0.1/db

chown -R grid:oinstall /u01

chown -R oracle:oinstall /u02

chmod -R 755 /u01

chmod -R 755 /u02

6.8./etc/pam.d/login

In traditional Unix authentication there is not much granularity available in limiting a user’s ability to log in. For example, how would you limit the hosts that users can come from when logging into your servers? Your first thought might be to set up TCP wrappers or possibly firewall rules. But what if you wanted to allow some users to log in from a specific host, but disallow others from logging in from it? Or what if you wanted to prevent some users from logging in at certain times of the day because of daily maintenance, but allow others (i.e., administrators) to log in at any time they wish? To get this working with every service that might be running on your system, you would traditionally have to patch each of them to support this new functionality. This is where PAM enters the picture.

PAM, or pluggable authentication modules, allows for just this sort of functionality (and more) without the need to patch all of your services. PAM has been available for quite some time under Linux, FreeBSD, and Solaris, and is now a standard component of the traditional authentication facilities on these platforms. Many services that need to use some sort of authentication now support PAM.

session required pam_limits.so

6.9.Stop ntpd/chronyd

rpm -q ntp

systemctl stop chronyd

systemctl disable chronyd

systemctl status chronyd

6.10.grid User Profile

Log in as grid (ORACLE_SID is +ASM1 on exadb01 and +ASM2 on exadb02).

vi .bash_profile

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/grid/12.2.0.1/grid

export GRID_HOME=/u01/app/grid/12.2.0.1/grid

export TNS_ADMIN=$GRID_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export PATH=$ORACLE_HOME/bin:$PATH

export DISPLAY=localhost:10.0

export PS1='[\u@\h:`pwd`]$'

alias sqlplus='rlwrap sqlplus'

alias rman='rlwrap rman'

6.11.oracle User Profile

Log in as oracle (ORACLE_SID is RABBIT1 on exadb01 and RABBIT2 on exadb02).

vi .bash_profile

export ORACLE_SID=RABBIT1

export ORACLE_UNQNAME=RABBIT

export ORACLE_BASE=/u02/app/oracle

export ORACLE_HOME=/u02/app/oracle/product/12.2.0.1/db

export GRID_HOME=/u01/app/grid/12.2.0.1/grid

export TNS_ADMIN=$GRID_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export PATH=$ORACLE_HOME/bin:$PATH

export DISPLAY=localhost:10.0

export PS1='[\u@\h:`pwd`]$'

alias sqlplus='rlwrap sqlplus'

alias rman='rlwrap rman'

6.12.Unzip the grid Installation Package

Log in as the grid user and upload linuxx64_12201_grid_home.zip via FTP.

cd ~

unzip -d $ORACLE_HOME linuxx64_12201_grid_home.zip

6.13.cellinit.ora

Note: on exadb02, use ipaddress1=10.0.0.51/24.

su

mkdir -p /etc/oracle/cell/network-config/

cd /etc/oracle/cell/network-config/

vi cellinit.ora

ipaddress1=10.0.0.50/24

_cell_print_all_params=true

_skgxp_gen_rpc_timeout_in_sec=90

_skgxp_gen_ant_off_rpc_timeout_in_sec=300

_skgxp_udp_interface_detection_time_secs=15

_skgxp_udp_use_tcb_client=true

_skgxp_udp_use_tcb=false

_reconnect_to_cell_attempts=4

6.14.cellip.ora

su

cd /etc/oracle/cell/network-config

vi cellip.ora

cell="10.0.0.100"

cell="10.0.0.101"
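The cellip.ora content follows a simple one-line-per-cell pattern, so it can be generated from a list of addresses. This is a minimal sketch; gen_cellip is a hypothetical helper name, and the two addresses are the storage cells used in this lab.

```shell
# Sketch: print cellip.ora content for a list of cell IP addresses.
# gen_cellip is a hypothetical helper name.
gen_cellip() {
    for ip in "$@"; do
        printf 'cell="%s"\n' "$ip"
    done
}

gen_cellip 10.0.0.100 10.0.0.101
# As root, the output could be redirected to
# /etc/oracle/cell/network-config/cellip.ora
```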

6.15.Discover Cell Disks with kfod

cd /home/grid/grid/rpm

rpm -ivh cvuqdisk-1.0.7-1.rpm

cd /home/grid/grid/stage/ext/bin

export LD_LIBRARY_PATH=/home/grid/grid/stage/ext/lib

[grid@exadb01:/home/grid/grid/stage/ext/bin]$./kfod disks=all op=disks

--------------------------------------------------------------------------------

Disk Size Path User Group

================================================================================

1: 1024 MB o/10.0.0.100/DATA_exacel01_DISK01

2: 976 MB o/10.0.0.100/DATA_exacel01_DISK02

3: 1024 MB o/10.0.0.100/DBFS_exacel01_DISK01

4: 976 MB o/10.0.0.100/DBFS_exacel01_DISK02

5: 1024 MB o/10.0.0.100/GI_exacel01_DISK01

6: 976 MB o/10.0.0.100/GI_exacel01_DISK02

7: 1024 MB o/10.0.0.100/RECO_exacel01_DISK01

8: 976 MB o/10.0.0.100/RECO_exacel01_DISK02

9: 1024 MB o/10.0.0.101/DATA_exacel02_DISK01

10: 976 MB o/10.0.0.101/DATA_exacel02_DISK02

11: 1024 MB o/10.0.0.101/DBFS_exacel02_DISK01

12: 976 MB o/10.0.0.101/DBFS_exacel02_DISK02

13: 1024 MB o/10.0.0.101/GI_exacel02_DISK01

14: 976 MB o/10.0.0.101/GI_exacel02_DISK02

15: 1024 MB o/10.0.0.101/RECO_exacel02_DISK01

16: 976 MB o/10.0.0.101/RECO_exacel02_DISK02
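The kfod listing can be summarized per disk group prefix to confirm that both cells contribute the expected capacity. This is a sketch that assumes the column layout shown above (number, size, unit, path); sum_kfod_by_group is a hypothetical helper name.

```shell
# Sketch: total the grid disk sizes per prefix (DATA, DBFS, GI, RECO) from
# kfod-style output read on standard input. sum_kfod_by_group is a
# hypothetical helper and assumes the column layout shown above.
sum_kfod_by_group() {
    awk '
        $3 == "MB" && $4 ~ /^o\// {
            n = split($4, parts, "/")      # o/<cell ip>/<grid disk name>
            split(parts[n], name, "_")     # <prefix>_<cell>_DISKnn
            total[name[1]] += $2
        }
        END { for (g in total) print g, total[g] }
    ' | sort
}

# Usage as the grid user:
#   ./kfod disks=all op=disks | sum_kfod_by_group
```

With the 16 disks listed above, each of the four prefixes totals 4000 MB across the two cells.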

6.16.Install exadb02

Repeat the steps above to install exadb02.

6.17.Configure SSH

Run sshUserSetup.sh to configure SSH.

exadb01:

grid user:

su - grid

cd $ORACLE_HOME/deinstall

./sshUserSetup.sh -user grid -hosts "exadb01 exadb02 exadb01-priv exadb02-priv" -advanced -noPromptPassphrase

oracle user:

su - oracle

cd $ORACLE_HOME/deinstall

./sshUserSetup.sh -user oracle -hosts "exadb01 exadb02 exadb01-priv exadb02-priv" -advanced -noPromptPassphrase

exadb02:

Repeat the steps above on exadb02.

Test:

exadb01:

ssh exadb02 date

ssh exadb02-priv date

exadb02:

ssh exadb01 date

ssh exadb01-priv date
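The connectivity tests above can be wrapped in a loop that reports each host. This is a minimal sketch; check_ssh is a hypothetical helper, and the SSH variable is an assumption added here so the loop can be dry-run without a real connection.

```shell
# Sketch: run "date" on each host over SSH and report failures.
# check_ssh is a hypothetical helper; set SSH to override the ssh command
# (for example SSH=true for a dry run).
check_ssh() {
    failed=0
    for host in "$@"; do
        # BatchMode makes ssh fail instead of prompting for a password
        if ${SSH:-ssh -o BatchMode=yes} "$host" date >/dev/null 2>&1; then
            echo "$host ok"
        else
            echo "$host FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Usage on exadb01, as grid and again as oracle:
#   check_ssh exadb02 exadb02-priv
```

A failure in batch mode usually means user equivalence is not yet in place for that host name, so the sshUserSetup.sh step may need to be repeated.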

6.18.Run runcluvfy.sh

cd $GRID_HOME/cv/rpm

su

rpm -ivh cvuqdisk-1.0.10-1.rpm

su - grid

$GRID_HOME/runcluvfy.sh stage -pre crsinst -n exadb01,exadb02 -verbose

Ignore the NTP error.

Ignore the swap size error.

7.Install Grid Software

7.1.Start the Installer

$ORACLE_HOME/gridSetup.sh

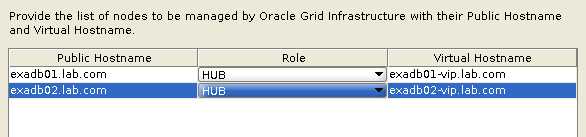

7.2.Installation

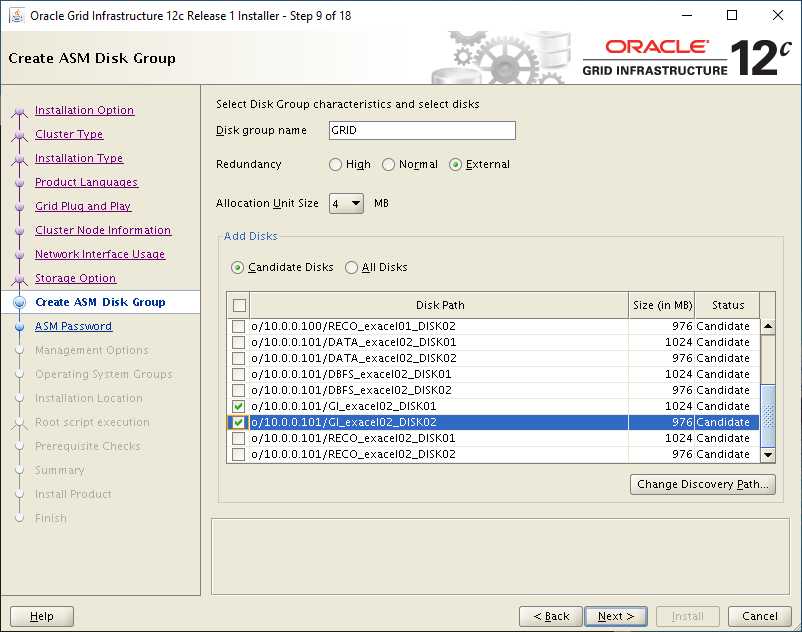

Configure Oracle Grid Infrastructure for a New Cluster

Configure an Oracle Standalone Cluster

Cluster Name: exadb

SCAN Name: exa-scan

SCAN Port: 1522

Configure ASM using block device

Grid Infrastructure Management Repository (GIMR) No

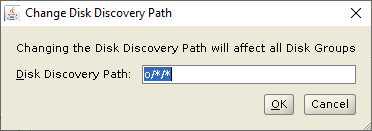

Select 4 GI disks on 2 cell servers.

Reference: commands to deinstall Grid Infrastructure:

su - grid

cd $ORACLE_HOME/deinstall

./deinstall

[grid@exadb01:/u01/app/grid/12.2.0.1/grid/deinstall]./deinstall

Checking for required files and bootstrapping ...

Please wait ...

Location of logs /tmp/deinstall2021-04-10_06-01-55PM/logs/

############ ORACLE DECONFIG TOOL START ############

######################### DECONFIG CHECK OPERATION START #########################

## [START] Install check configuration ##

Deinstall utility is unable to determine the list of nodes, on which this home is configured, as the Oracle Clusterware processes are not running on the local node.

Is this home configured on other nodes (y - yes, n - no)?[n]:y

Specify a comma-separated list of remote nodes to cleanup : exadb02

Checking for existence of the Oracle home location /u01/app/grid/12.2.0.1/grid

Oracle Home type selected for deinstall is: Oracle Grid Infrastructure for a Cluster

Oracle Base selected for deinstall is: /u01/app/oracle

Checking for existence of central inventory location /u01/app/oraInventory

Checking for existence of the Oracle Grid Infrastructure home /u01/app/grid/12.2.0.1/grid

The following nodes are part of this cluster: exadb01,exadb02

Active Remote Nodes are exadb02

Checking for sufficient temp space availability on node(s) : 'exadb01,exadb02'

## [END] Install check configuration ##

Traces log file: /tmp/deinstall2021-04-10_06-01-55PM/logs//crsdc_2021-04-10_06-03-12PM.log

Network Configuration check config START

Network de-configuration trace file location: /tmp/deinstall2021-04-10_06-01-55PM/logs/netdc_check2021-04-10_06-03-12-PM.log

Specify all RAC listeners (do not include SCAN listener) that are to be de-configured. Enter .(dot) to deselect all. [LISTENER]:

Network Configuration check config END

Asm Check Configuration START

ASM de-configuration trace file location: /tmp/deinstall2021-04-10_06-01-55PM/logs/asmcadc_check2021-04-10_06-03-41-PM.log

ASM configuration was not detected in this Oracle home. Was ASM configured in this Oracle home (y|n) [n]: n

ASM was not detected in the Oracle Home

Database Check Configuration START

Database de-configuration trace file location: /tmp/deinstall2021-04-10_06-01-55PM/logs/databasedc_check2021-04-10_06-04-00-PM.log

Oracle Grid Management database was not found in this Grid Infrastructure home

Database Check Configuration END

######################### DECONFIG CHECK OPERATION END #########################

####################### DECONFIG CHECK OPERATION SUMMARY #######################

Oracle Grid Infrastructure Home is: /u01/app/grid/12.2.0.1/grid

The following nodes are part of this cluster: exadb01,exadb02

Active Remote Nodes are exadb02

The cluster node(s) on which the Oracle home deinstallation will be performed are:exadb01,exadb02

Oracle Home selected for deinstall is: /u01/app/grid/12.2.0.1/grid

Inventory Location where the Oracle home registered is: /u01/app/oraInventory

Following RAC listener(s) will be de-configured: LISTENER

ASM was not detected in the Oracle Home

Oracle Grid Management database was not found in this Grid Infrastructure home

Do you want to continue (y - yes, n - no)? [n]: y

A log of this session will be written to: '/tmp/deinstall2021-04-10_06-01-55PM/logs/deinstall_deconfig2021-04-10_06-02-45-PM.out'

Any error messages from this session will be written to: '/tmp/deinstall2021-04-10_06-01-55PM/logs/deinstall_deconfig2021-04-10_06-02-45-PM.err'

######################## DECONFIG CLEAN OPERATION START ########################

Database de-configuration trace file location: /tmp/deinstall2021-04-10_06-01-55PM/logs/databasedc_clean2021-04-10_06-04-29-PM.log

ASM de-configuration trace file location: /tmp/deinstall2021-04-10_06-01-55PM/logs/asmcadc_clean2021-04-10_06-04-29-PM.log

ASM Clean Configuration END

Network Configuration clean config START

Network de-configuration trace file location: /tmp/deinstall2021-04-10_06-01-55PM/logs/netdc_clean2021-04-10_06-04-29-PM.log

De-configuring RAC listener(s): LISTENER

De-configuring listener: LISTENER

Stopping listener: LISTENER

Listener stopped successfully.

Listener de-configured successfully.

De-configuring Naming Methods configuration file on all nodes...

Naming Methods configuration file de-configured successfully.

De-configuring Local Net Service Names configuration file on all nodes...

Local Net Service Names configuration file de-configured successfully.

De-configuring Directory Usage configuration file on all nodes...

Directory Usage configuration file de-configured successfully.

De-configuring backup files on all nodes...

Backup files de-configured successfully.

The network configuration has been cleaned up successfully.

Network Configuration clean config END

---------------------------------------->

The deconfig command below can be executed in parallel on all the remote nodes. Execute the command on the local node after the execution completes on all the remote nodes.

Run the following command as the root user or the administrator on node "exadb02".

/tmp/deinstall2021-04-10_06-01-55PM/perl/bin/perl -I/tmp/deinstall2021-04-10_06-01-55PM/perl/lib -I/tmp/deinstall2021-04-10_06-01-55PM/crs/install /tmp/deinstall2021-04-10_06-01-55PM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2021-04-10_06-01-55PM/response/deinstall_OraGI12Home1.rsp"

Run the following command as the root user or the administrator on node "exadb01".

/tmp/deinstall2021-04-10_06-01-55PM/perl/bin/perl -I/tmp/deinstall2021-04-10_06-01-55PM/perl/lib -I/tmp/deinstall2021-04-10_06-01-55PM/crs/install /tmp/deinstall2021-04-10_06-01-55PM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2021-04-10_06-01-55PM/response/deinstall_OraGI12Home1.rsp" -lastnode

Press Enter after you finish running the above commands

<----------------------------------------

[root@exadb02:/u01/app/oracle/crsdata/exadb02/crsconfig]$ /tmp/deinstall2021-04-10_06-01-55PM/perl/bin/perl -I/tmp/deinstall2021-04-10_06-01-55PM/perl/lib -I/tmp/deinstall2021-04-10_06-01-55PM/crs/install /tmp/deinstall2021-04-10_06-01-55PM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2021-04-10_06-01-55PM/response/deinstall_OraGI12Home1.rsp"

Using configuration parameter file: /tmp/deinstall2021-04-10_06-01-55PM/response/deinstall_OraGI12Home1.rsp

PRCR-1070 : Failed to check if resource ora.net1.network is registered

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1070 : Failed to check if resource ora.helper is registered

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1070 : Failed to check if resource ora.ons is registered

CRS-0184 : Cannot communicate with the CRS daemon.

2021/04/10 18:07:21 CLSRSC-4006: Removing Oracle Trace File Analyzer (TFA) Collector.

2021/04/10 18:09:02 CLSRSC-4007: Successfully removed Oracle Trace File Analyzer (TFA) Collector.

Failure in execution (rc=-1, 0, 2) for command /etc/init.d/ohasd deinstall

2021/04/10 18:09:03 CLSRSC-336: Successfully deconfigured Oracle Clusterware stack on this node

[root@exadb01:/u01/app/grid/12.2.0.1/grid/deinstall]$ /tmp/deinstall2021-04-10_06-01-55PM/perl/bin/perl -I/tmp/deinstall2021-04-10_06-01-55PM/perl/lib -I/tmp/deinstall2021-04-10_06-01-55PM/crs/install /tmp/deinstall2021-04-10_06-01-55PM/crs/install/rootcrs.pl -force -deconfig -paramfile "/tmp/deinstall2021-04-10_06-01-55PM/response/deinstall_OraGI12Home1.rsp" -lastnode

Using configuration parameter file: /tmp/deinstall2021-04-10_06-01-55PM/response/deinstall_OraGI12Home1.rsp

PRCR-1070 : Failed to check if resource ora.cvu is registered

CRS-0184 : Cannot communicate with the CRS daemon.

2021/04/10 18:10:10 CLSRSC-180: An error occurred while executing the command 'srvctl stop cvu -f' (error code 0)

PRCR-1070 : Failed to check if resource ora.cvu is registered

CRS-0184 : Cannot communicate with the CRS daemon.

2021/04/10 18:10:11 CLSRSC-180: An error occurred while executing the command 'srvctl remove cvu -f' (error code 0)

OC4J failed to stop

PRCR-1070 : Failed to check if resource ora.oc4j is registered

CRS-0184 : Cannot communicate with the CRS daemon.

OC4J could not be removed

PRCR-1070 : Failed to check if resource ora.oc4j is registered

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1068 : Failed to query resources

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1068 : Failed to query resources

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1068 : Failed to query resources

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1068 : Failed to query resources

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1070 : Failed to check if resource ora.net1.network is registered

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1070 : Failed to check if resource ora.helper is registered

CRS-0184 : Cannot communicate with the CRS daemon.

PRCR-1070 : Failed to check if resource ora.ons is registered

CRS-0184 : Cannot communicate with the CRS daemon.

CRS-4123: Oracle High Availability Services has been started.

CRS-2672: Attempting to start 'ora.evmd' on 'exadb01'

CRS-2672: Attempting to start 'ora.mdnsd' on 'exadb01'

CRS-2676: Start of 'ora.mdnsd' on 'exadb01' succeeded

CRS-2676: Start of 'ora.evmd' on 'exadb01' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'exadb01'

CRS-2676: Start of 'ora.gpnpd' on 'exadb01' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'exadb01'

CRS-2672: Attempting to start 'ora.gipcd' on 'exadb01'

CRS-2676: Start of 'ora.cssdmonitor' on 'exadb01' succeeded

CRS-2676: Start of 'ora.gipcd' on 'exadb01' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'exadb01'

CRS-2672: Attempting to start 'ora.diskmon' on 'exadb01'

CRS-2676: Start of 'ora.diskmon' on 'exadb01' succeeded

CRS-2674: Start of 'ora.cssd' on 'exadb01' failed

CRS-2679: Attempting to clean 'ora.cssd' on 'exadb01'

CRS-2681: Clean of 'ora.cssd' on 'exadb01' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'exadb01'

CRS-2677: Stop of 'ora.gipcd' on 'exadb01' succeeded

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'exadb01'

CRS-2677: Stop of 'ora.cssdmonitor' on 'exadb01' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'exadb01'

CRS-2677: Stop of 'ora.gpnpd' on 'exadb01' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'exadb01'

CRS-2677: Stop of 'ora.mdnsd' on 'exadb01' succeeded

CRS-2673: Attempting to stop 'ora.evmd' on 'exadb01'

CRS-2677: Stop of 'ora.evmd' on 'exadb01' succeeded

CRS-4000: Command Start failed, or completed with errors.

2021/04/10 18:10:54 CLSRSC-558: failed to deconfigure ASM

Died at /tmp/deinstall2021-04-10_06-01-55PM/crs/install/crsdeconfig.pm line 1039.

[root@exadb01:/u01/app/grid/12.2.0.1/grid/deinstall]
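
The deconfig output above is long and repetitive, so it can help to condense it before deciding what to do next. The following is a minimal sketch, assuming the terminal output of rootcrs.pl was saved to a text file (the file name deconfig_exadb01.txt and both function names are hypothetical). It counts the PRCR/CRS/CLSRSC message codes and pulls out the lines that indicate the run did not finish cleanly. It relies on grep -o, which GNU and BSD grep provide but POSIX does not require.

```shell
#!/bin/sh
# Count the Oracle message codes (PRCR-nnnn, CRS-nnnn, CLSRSC-nnn) found in a
# deconfig log read from stdin. Prints "count code", most frequent first.
summarize_codes() {
    grep -oE '(PRCR|CRS|CLSRSC)-[0-9]+' \
        | LC_ALL=C sort \
        | uniq -c \
        | LC_ALL=C sort -k1,1nr -k2 \
        | awk '{print $1, $2}'
}

# Print only the lines that suggest the run did not complete cleanly.
# The match is case-sensitive, so the "Failed to check ..." probe messages
# (capital F) are intentionally not included.
fatal_lines() {
    grep -E 'failed|Died at|Failure in execution'
}

# Example (hypothetical file name):
#   summarize_codes < deconfig_exadb01.txt
#   fatal_lines     < deconfig_exadb01.txt
```

Applied to the exadb01 run above, the most frequent code would be CRS-0184, which only says that the CRS daemon could not be reached. The lines returned by fatal_lines (the ora.cssd start failure, CLSRSC-558 and the "Died at" line) are the ones that explain why the deconfiguration stopped.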

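Manually removing RAC

1 Shut down the databases, the listeners, and the cluster

2 Remove the grid user's ORACLE_HOME and ORACLE_BASE (under /u01)

3 Remove the oracle user's ORACLE_HOME and ORACLE_BASE (under /u02)

4 Remove the Oracle files under /usr/local/bin/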

rm -rf /usr/local/bin/dbhome

rm -rf /usr/local/bin/oraenv

rm -rf /usr/local/bin/coraenv

5 Remove the configuration files under /etc

cd /etc/

rm -rf ora*

cd /etc/init

rm -rf oracle*

cd /etc/init.d/

rm -f init.ohasd

rm -f ohasd

rm -f init.tfa

6 Remove /var/tmp/.oracle (cluster registration information). It is a directory, so rm needs -r:

rm -rf /var/tmp/.oracle

7 Remove the temporary installation files under /tmp

cd /tmp

rm -rf CVU*

rm -rf OraInstall*

8 Reformat the disks used by the ASM disk groups

9 Check for leftover processes:

ps -ef | grep crs

ps -ef | grep ora

ps -ef | grep grid
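
Before and after running the steps above, it can be useful to see what is still present without deleting anything. The following is a minimal sketch: both function names are hypothetical, the paths in the example comment are the ones listed in steps 4 to 7, and the process filter uses the same three patterns as step 9 while dropping the grep commands themselves. The patterns are broad (for example, "ora" also matches "storage"), so treat the output as a list to review, not a verdict.

```shell
#!/bin/sh
# Report which of the given paths still exist. Nothing is deleted.
# A glob that matches nothing is passed through literally and simply
# fails the -e test, so it produces no output.
list_leftovers() {
    for p in "$@"; do
        if [ -e "$p" ]; then
            printf 'LEFTOVER %s\n' "$p"
        fi
    done
}

# Filter `ps -ef` style text on stdin for process lines that mention
# crs, ora or grid, excluding the grep commands themselves.
residual_procs() {
    grep -E 'crs|ora|grid' | grep -v 'grep'
}

# Example (run as root on each node):
#   list_leftovers /usr/local/bin/dbhome /usr/local/bin/oraenv \
#       /usr/local/bin/coraenv /etc/ora* /etc/init.d/init.ohasd \
#       /etc/init.d/ohasd /etc/init.d/init.tfa /var/tmp/.oracle \
#       /tmp/CVU* /tmp/OraInstall*
#   ps -ef | residual_procs
```

If both commands print nothing, the node has no leftovers that these particular checks can see. Any process that is still listed should be stopped before the ASM disks are reformatted in step 8.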